Unlocking the Power of LLMs: A Deep Dive into RAG Systems for Retrieval-Augmented Generation

- Author: Adil ABBADI

Introduction

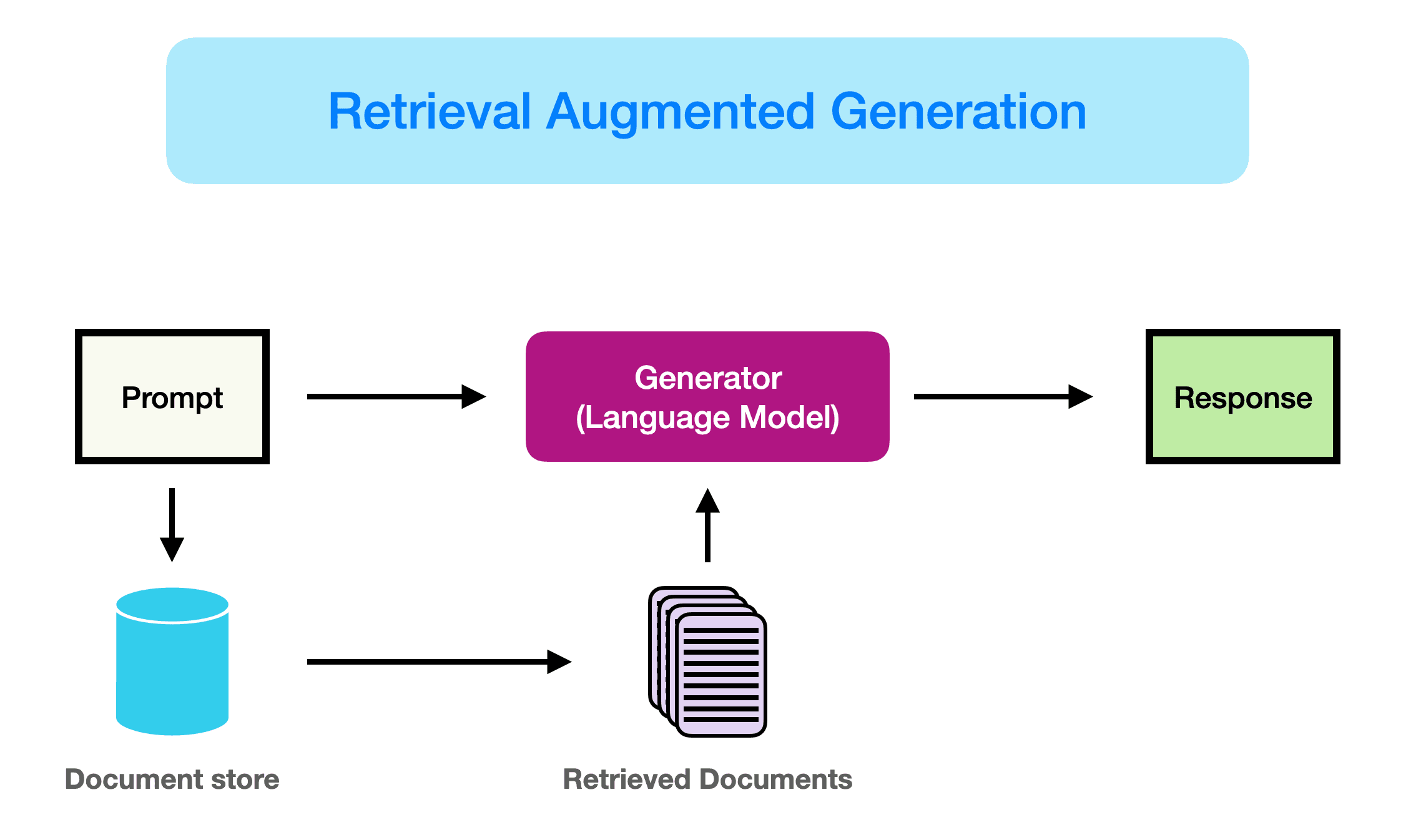

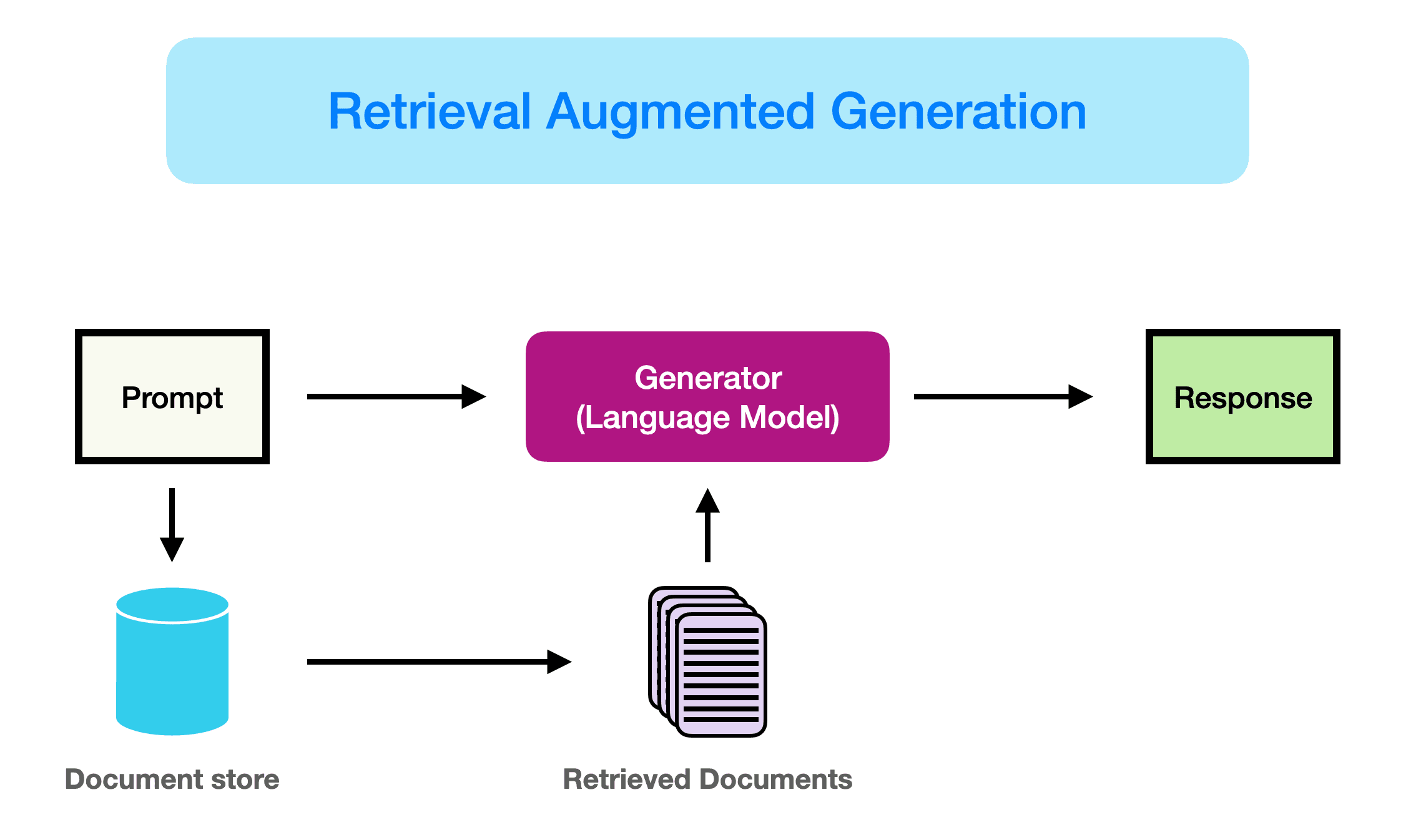

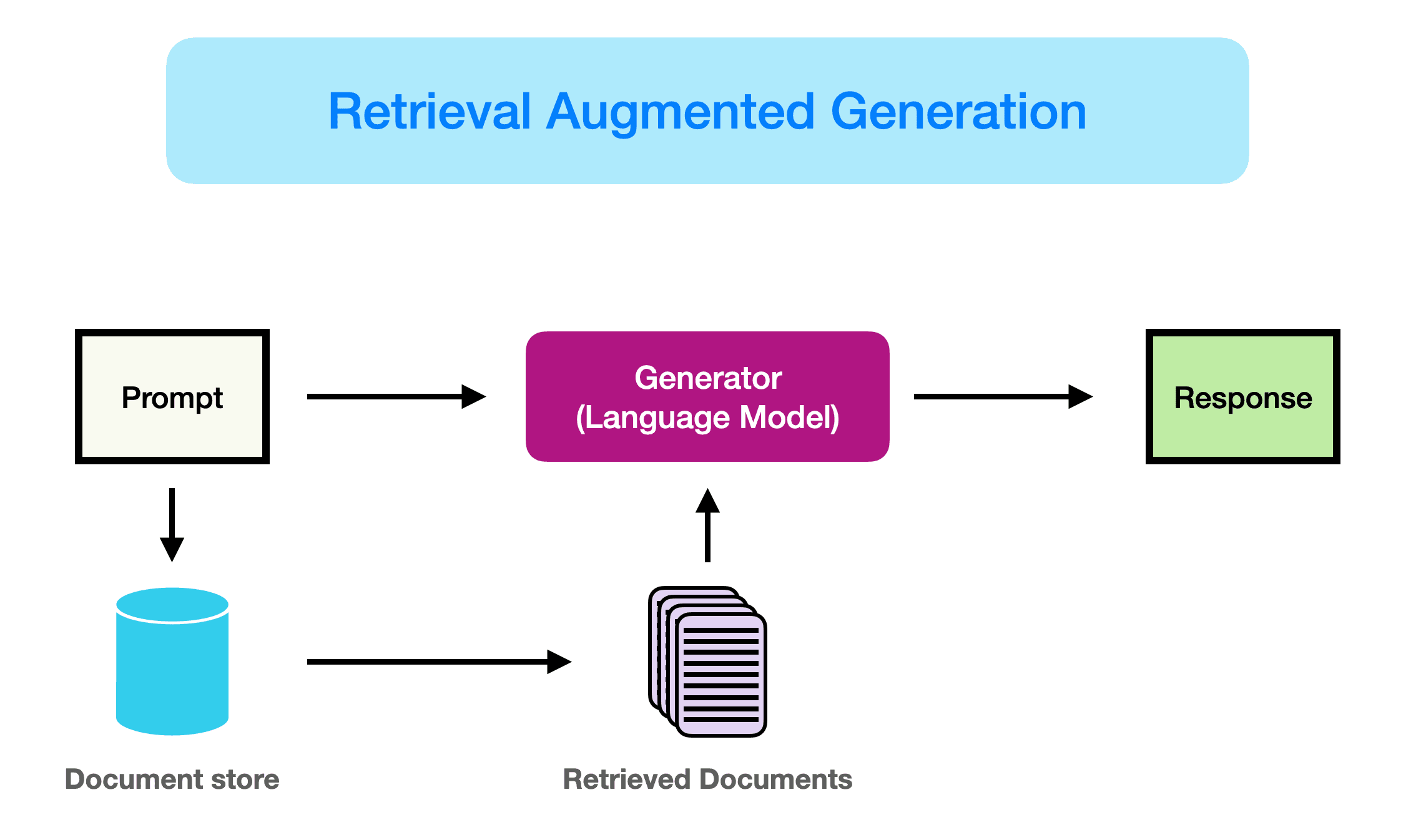

The advent of Large Language Models (LLMs) has revolutionized the field of Natural Language Processing (NLP). These models are remarkably good at generating coherent, context-aware text. However, they can only draw on what they learned during training. Their knowledge is frozen at a training cutoff, they cannot see private or domain-specific documents, and they sometimes produce confident but incorrect answers (often called hallucinations), especially on complex, knowledge-intensive queries. This is where Retrieval-Augmented Generation (RAG) systems come into play. A RAG system pairs an LLM with a retrieval component that looks up relevant passages in an external knowledge source, such as a document collection, and supplies them to the model at generation time. In this article, we'll explore the architecture, advantages, applications, and open challenges of RAG systems.

- How RAG Systems Work

- Advantages of RAG Systems

- Applications of RAG Systems

- Challenges and Future Directions

- Conclusion

- Call to Action

How RAG Systems Work

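A RAG system consists of two primary components: a retriever and a generator. The retriever fetches the passages most relevant to the user's query from a large knowledge base, often by comparing vector representations (embeddings) of the query and the documents, or with a keyword-based ranking function such as BM25. The generator is an LLM that receives the original query together with the retrieved passages as its input and produces the final response.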
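To make the retriever's role concrete without any model downloads, here is a minimal sketch that scores documents by the cosine similarity of their bag-of-words count vectors and returns the top k. This is a deliberately simple stand-in for the learned embeddings or BM25 scoring used in practice; the `vectorize`, `cosine`, and `retrieve` helpers and the toy documents are hypothetical.

```python
import math
from collections import Counter


def vectorize(text):
    # Lowercased bag-of-words term counts (a stand-in for a real embedding)
    return Counter(text.lower().split())


def cosine(a, b):
    # Cosine similarity between two sparse count vectors
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    # Score every document against the query and keep the k best
    q = vectorize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:k]


docs = [
    "the eiffel tower is in paris",
    "rag pairs a retriever with a generator",
    "paris is the capital of france",
]
print(retrieve("where is the eiffel tower", docs, k=1))
# ['the eiffel tower is in paris']
```

The first document shares four words with the query, the third shares two, and the second shares none, so the ranking follows directly from word overlap. A production retriever replaces `vectorize` with a trained encoder and the linear scan with a vector index.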

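```python
# Simplified example of a RAG system using a retriever and generator.
# Assumptions: a small in-memory list stands in for the knowledge base, and the
# two Hugging Face checkpoints are illustrative choices, not requirements.
import torch
from transformers import AutoModel, AutoModelForSeq2SeqLM, AutoTokenizer

documents = [
    "Retrieval-Augmented Generation pairs a retriever with a text generator.",
    "The Eiffel Tower is located in Paris, France.",
]

# Define the retriever: a sentence-embedding encoder (not a classifier)
retriever_tokenizer = AutoTokenizer.from_pretrained("sentence-transformers/all-MiniLM-L6-v2")
retriever_model = AutoModel.from_pretrained("sentence-transformers/all-MiniLM-L6-v2")

# Define the generator: a sequence-to-sequence model that produces text
generator_tokenizer = AutoTokenizer.from_pretrained("google/flan-t5-small")
generator_model = AutoModelForSeq2SeqLM.from_pretrained("google/flan-t5-small")

def embed(texts):
    # Mean-pool token embeddings (ignoring padding) into one unit vector per text
    batch = retriever_tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    with torch.no_grad():
        hidden = retriever_model(**batch).last_hidden_state
    mask = batch["attention_mask"].unsqueeze(-1).float()
    return torch.nn.functional.normalize((hidden * mask).sum(1) / mask.sum(1), dim=-1)

def rag_system(input_query, k=1):
    # Retrieve the k documents with the highest cosine similarity to the query
    scores = embed([input_query]) @ embed(documents).T
    top_ids = scores[0].topk(k).indices.tolist()
    relevant_info = " ".join(documents[i] for i in top_ids)
    # Generate a response conditioned on the query and the retrieved information
    prompt = f"Context: {relevant_info}\nQuestion: {input_query}"
    generator_input = generator_tokenizer(prompt, return_tensors="pt")
    output_ids = generator_model.generate(**generator_input, max_new_tokens=50)
    return generator_tokenizer.decode(output_ids[0], skip_special_tokens=True)
```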
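The point where the query and the retrieved text are combined into a single generator input is where the "augmentation" in RAG happens. One common pattern, shown here as an assumption rather than a fixed format, is to number each retrieved passage, cap the total context size, and instruct the generator to answer from that context. The `build_prompt` helper, its template wording, and the character-based `max_chars` budget (a rough proxy for a token limit) are all hypothetical.

```python
def build_prompt(query, passages, max_chars=1000):
    # Number each retrieved passage so the answer can refer back to its source
    context_lines = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        line = f"[{i}] {passage}"
        if used + len(line) > max_chars:
            break  # stop once the rough context budget would be exceeded
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


print(build_prompt("Where is the Eiffel Tower?", ["The Eiffel Tower is in Paris."]))
```

Passages are added in retrieval order, so when the budget runs out it is the lowest-ranked passages that are dropped.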

Advantages of RAG Systems

RAG systems offer several advantages over traditional LLMs:

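- Improved accuracy: By grounding responses in retrieved evidence, RAG systems can provide more accurate and informative answers and are less prone to hallucination.

- Enhanced knowledge coverage: RAG systems can draw on a large external knowledge base, covering topics and domains that were underrepresented in the model's training data. Updating that knowledge base also updates what the system can answer, without retraining the model.

- Increased robustness: The retriever can help filter out irrelevant information before it reaches the generator. This benefit depends on the quality of the knowledge base, however: misleading or adversarial documents that do get retrieved can also mislead the generator.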
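Two of these advantages can be illustrated with a small in-memory index: new facts become retrievable as soon as they are added, with no model retraining, and a minimum-score threshold drops weakly related documents before they reach the generator. The `KnowledgeBase` class below is hypothetical, and its Jaccard word-overlap score is a simple stand-in for a real retriever.

```python
class KnowledgeBase:
    """Toy in-memory index; Jaccard word overlap stands in for a real retriever."""

    def __init__(self):
        self.documents = []

    def add(self, text):
        # Adding a document changes what can be retrieved; no model is retrained
        self.documents.append(text)

    @staticmethod
    def score(query, doc):
        # Jaccard similarity between the two sets of lowercased words
        q, d = set(query.lower().split()), set(doc.lower().split())
        return len(q & d) / len(q | d) if q | d else 0.0

    def search(self, query, k=3, min_score=0.2):
        # Rank by score and discard anything below the relevance threshold
        scored = [(self.score(query, doc), doc) for doc in self.documents]
        scored = [pair for pair in scored if pair[0] >= min_score]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in scored[:k]]


kb = KnowledgeBase()
query = "does the 2024 release support streaming"
print(kb.search(query))  # [] : nothing indexed yet
kb.add("the 2024 release adds streaming support")
kb.add("bananas are rich in potassium")
print(kb.search(query))  # only the relevant document passes the threshold
```

The threshold value is a tuning choice: set too high, it discards useful context; set too low, unrelated passages leak into the prompt.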

Applications of RAG Systems

RAG systems have numerous applications in various domains:

- Question Answering: RAG systems can be used to improve question answering models, enabling them to provide more accurate and informative responses.

- Text Summarization: RAG systems can support summarization by retrieving related source material, helping the model produce more relevant and better-grounded summaries.

- Chatbots and Conversational AI: RAG systems can be integrated into chatbots and conversational AI systems, enabling them to engage in more informed and contextual conversations.

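```python
# Example of using a RAG system for question answering
# (relies on rag_system() defined in the earlier example)
def extract_answer(rag_response):
    # Hypothetical post-processing: keep the first non-empty line of the response
    lines = [line.strip() for line in rag_response.splitlines() if line.strip()]
    return lines[0] if lines else ""

def question_answering(input_question):
    rag_response = rag_system(input_question)
    return extract_answer(rag_response)
```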
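For chatbots and conversational AI, a follow-up such as "How tall is it?" contains too little information to retrieve the right passages on its own. One common approach, sketched here as an assumption rather than the article's method, is to fold the most recent user turns into the retrieval query. The `build_retrieval_query` helper and its `window` parameter are hypothetical; the message format mirrors the familiar role/content chat structure.

```python
def build_retrieval_query(history, user_message, window=2):
    # Keep only the last `window` user turns to bound the query length
    user_turns = [turn["content"] for turn in history if turn["role"] == "user"]
    recent = user_turns[-window:] if window > 0 else []
    return " ".join(recent + [user_message])


history = [
    {"role": "user", "content": "Tell me about the Eiffel Tower."},
    {"role": "assistant", "content": "It is a wrought-iron tower in Paris."},
]
print(build_retrieval_query(history, "How tall is it?"))
# Tell me about the Eiffel Tower. How tall is it?
```

Assistant turns are skipped here to keep the query focused on what the user asked; some systems instead ask the LLM itself to rewrite the follow-up into a standalone question.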

Challenges and Future Directions

While RAG systems have shown promising results, there are still several challenges that need to be addressed:

- Scalability: Indexing and searching very large knowledge bases, and running the retriever on every query, add memory, compute, and latency costs, making scalability a significant challenge.

- Knowledge Base Construction: Building and maintaining a vast, high-quality, up-to-date knowledge base is a daunting task.

- Evaluation Metrics: Developing metrics that accurately assess RAG systems, covering both retrieval quality and whether the generated answers are faithful to the retrieved evidence, is an open research question.
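One concrete starting point is to evaluate the retriever in isolation with recall@k: for each query, the fraction of its relevant documents that appear among the top k retrieved results, averaged over queries. This measures retrieval only, not whether the generated answer is faithful to the retrieved text. The `recall_at_k` function and the toy document IDs below are illustrative.

```python
def recall_at_k(retrieved, relevant, k):
    """Mean recall@k over queries.

    retrieved: list of ranked document-ID lists, one per query
    relevant:  list of sets of relevant document IDs, one per query
    """
    scores = []
    for ranked, gold in zip(retrieved, relevant):
        if not gold:
            continue  # skip queries with no labelled relevant documents
        hits = len(set(ranked[:k]) & gold)
        scores.append(hits / len(gold))
    return sum(scores) / len(scores) if scores else 0.0


retrieved = [["d3", "d1", "d7"], ["d2", "d5", "d9"]]
relevant = [{"d1"}, {"d4", "d5"}]
print(recall_at_k(retrieved, relevant, k=2))
# 0.75
```

With k=2, the first query finds its only relevant document (recall 1.0) and the second finds one of two (recall 0.5), giving a mean of 0.75.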

Conclusion

RAG systems have the potential to revolutionize the field of NLP by enhancing the capabilities of LLMs. By incorporating a retrieval component, RAG systems can provide more accurate and informative responses. While there are still challenges to be addressed, the advantages and applications of RAG systems make them an exciting area of research and development.

Call to Action

As the NLP community continues to advance the field of RAG systems, it's essential to stay updated on the latest developments and breakthroughs. Share your thoughts and ideas on how RAG systems can be applied to real-world problems, and let's work together to unlock the full potential of LLMs.