Local Model Serving with TensorRT: Optimizing Inference

- Author: Adil ABBADI

Introduction

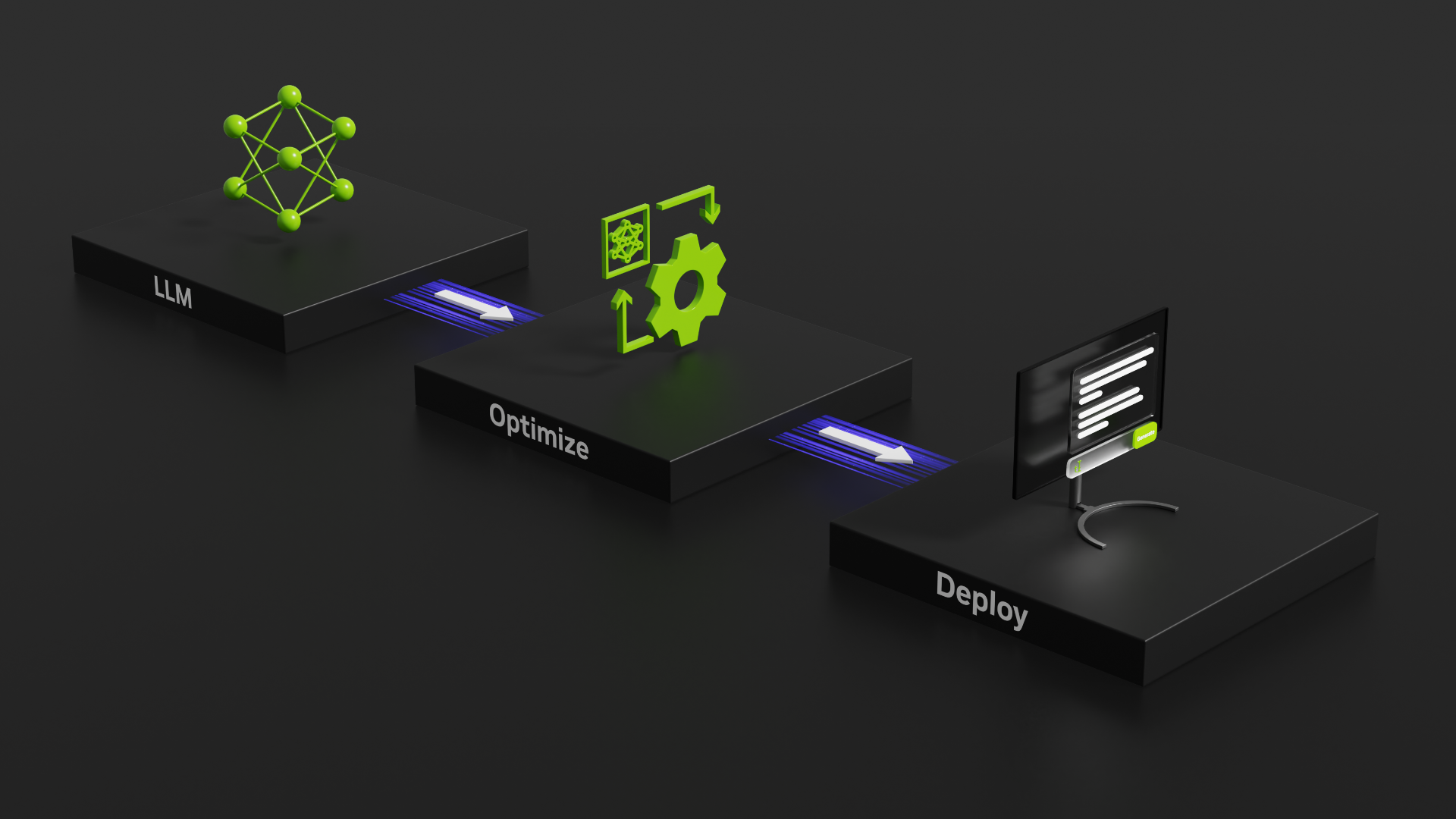

As machine learning models become larger and more computationally intensive, deploying them efficiently is crucial for real-time inference. Serving models locally with TensorRT is one effective way to speed up inference and reduce latency on NVIDIA GPUs. In this blog post, we'll look at what TensorRT is and how to serve machine learning models locally for faster, more efficient deployment.

- What is TensorRT?

- Why Local Model Serving with TensorRT?

- Setting Up a Local Model Serving Environment with TensorRT

- Serving Models with TensorRT

- Optimizing Inference with TensorRT

- Conclusion

- Ready to Optimize Your Inference?

What is TensorRT?

TensorRT is a software development kit (SDK) developed by NVIDIA for high-performance deep learning inference. It's designed to optimize, compile, and execute trained models on NVIDIA GPUs, and it is available for Linux and Windows; macOS is not supported. The core library is proprietary, while components such as the ONNX parser, plugins, and samples are open source. TensorRT provides a C++ API and Python bindings, making it accessible to a wide range of developers.

TensorRT's primary goal is to optimize inference performance by leveraging the underlying hardware's capabilities. It achieves this by:

- Model optimization: TensorRT rewrites the network graph, for example by fusing adjacent layers and removing unused operations, to reduce computation and memory usage.

- Platform optimization: TensorRT tunes the model for the target GPU by selecting the kernels that run fastest on that specific hardware.

- Runtime optimization: TensorRT optimizes the inference engine to minimize latency and maximize throughput.

Why Local Model Serving with TensorRT?

Local model serving with TensorRT offers several benefits, including:

- Faster inference: TensorRT's optimization and compilation process enables faster inference times, making it ideal for real-time applications.

- Reduced latency: By serving models locally, you can eliminate the latency associated with remote inference services.

- Increased security: Local model serving ensures that sensitive data remains on-premises, reducing the risk of data breaches.

- Flexibility: Models trained in frameworks such as TensorFlow or PyTorch can be exported to ONNX and imported into TensorRT (older releases also shipped a Caffe parser), so a single runtime can cover diverse model deployments.

Setting Up a Local Model Serving Environment with TensorRT

To get started with local model serving using TensorRT, follow these steps:

- Install TensorRT: Follow the official installation instructions for your platform.

- Prepare your model: Ensure your model is compatible with TensorRT. You can use popular frameworks like TensorFlow or PyTorch to develop and train your model.

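- Convert your model: Export your model to ONNX, then use trtexec, the command-line tool that ships with TensorRT, to build a serialized TensorRT engine from it.

Here's an example of building an engine from an ONNX model (for instance, one exported from TensorFlow) with trtexec. Note that the engine file is written with --saveEngine:

trtexec --onnx=<model.onnx> --saveEngine=<model.trt>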
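If the conversion needs to run from a script, the same command can be assembled in Python. The following is a minimal sketch, assuming trtexec is on the PATH; the helper names build_trtexec_command and convert, and the file names, are hypothetical and only used for illustration. The --fp16 flag is optional and asks trtexec to allow FP16 kernels during the build.

```python
import shlex
import subprocess
from pathlib import Path


def build_trtexec_command(onnx_path, engine_path, fp16=False):
    """Return the trtexec argument list that builds an engine from an ONNX file."""
    command = [
        "trtexec",
        f"--onnx={onnx_path}",
        f"--saveEngine={engine_path}",
    ]
    if fp16:
        command.append("--fp16")  # allow FP16 kernels during the build
    return command


def convert(onnx_path, engine_path, fp16=False):
    """Run trtexec and raise CalledProcessError if the build fails."""
    if not Path(onnx_path).is_file():
        raise FileNotFoundError(onnx_path)
    command = build_trtexec_command(onnx_path, engine_path, fp16)
    print("Running:", shlex.join(command))
    subprocess.run(command, check=True)


# Hypothetical file names, shown only to illustrate the resulting command line.
print(shlex.join(build_trtexec_command("model.onnx", "model.trt", fp16=True)))
```

Keeping the command construction separate from the subprocess call makes it easy to log or inspect the exact command before a long engine build starts.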

Serving Models with TensorRT

Once you've prepared your model and converted it to a TensorRT-compatible format, you can serve it using the TensorRT inference engine.

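Here's an example of running inference on a serialized engine using the TensorRT Python API. It assumes TensorRT 8.5 or later, PyCUDA for device memory management, and an engine with static shapes, one input, and one output:

import numpy as np
import pycuda.autoinit  # creates a CUDA context on import
import pycuda.driver as cuda
import tensorrt as trt

# Create a TensorRT runtime
runtime = trt.Runtime(trt.Logger(trt.Logger.INFO))

# Load the serialized TensorRT engine
with open("model.trt", "rb") as f:
    engine = runtime.deserialize_cuda_engine(f.read())

# Create an execution context
context = engine.create_execution_context()

# Allocate host and device memory for each input and output tensor
host_buffers, device_buffers = [], []
for i in range(engine.num_io_tensors):
    name = engine.get_tensor_name(i)
    dtype = trt.nptype(engine.get_tensor_dtype(name))
    host_buffers.append(np.zeros(tuple(engine.get_tensor_shape(name)), dtype=dtype))
    device_buffers.append(cuda.mem_alloc(host_buffers[-1].nbytes))

# Run inference (assumption: tensor 0 is the input, tensor 1 is the output)
cuda.memcpy_htod(device_buffers[0], host_buffers[0])  # fill host_buffers[0] with real data first
context.execute_v2([int(d) for d in device_buffers])
cuda.memcpy_dtoh(host_buffers[1], device_buffers[1])

# Retrieve the output
output_data = host_buffers[1]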
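To turn an inference call into something other local processes can reach, it can be wrapped in a small HTTP endpoint. The sketch below uses only Python's standard library and is not part of TensorRT; the JSON request shape ({"inputs": [...]}), the function names, and fake_infer (a stand-in that doubles its inputs instead of calling an engine) are all assumptions made for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict_json(infer, body: bytes) -> bytes:
    """Decode a JSON request, call the inference function, encode the result."""
    payload = json.loads(body)
    outputs = infer(payload["inputs"])
    return json.dumps({"outputs": outputs}).encode("utf-8")


def make_handler(infer):
    """Build a request handler class bound to one inference function."""

    class PredictHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            try:
                response = predict_json(infer, self.rfile.read(length))
                status = 200
            except (KeyError, TypeError, ValueError) as error:
                # Malformed JSON or a missing "inputs" field is a client error.
                response = json.dumps({"error": str(error)}).encode("utf-8")
                status = 400
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(response)))
            self.end_headers()
            self.wfile.write(response)

    return PredictHandler


def serve(infer, host="127.0.0.1", port=8000):
    """Serve predictions on the loopback interface until interrupted."""
    HTTPServer((host, port), make_handler(infer)).serve_forever()


def fake_infer(inputs):
    """Hypothetical stand-in for a TensorRT call: doubles each input value."""
    return [2 * value for value in inputs]
```

Calling serve(fake_infer) would start the server on port 8000. In a real deployment, infer would wrap the host-to-device copy, the engine execution, and the device-to-host copy. HTTPServer handles one request at a time, which fits this sketch because a single execution context should not be used by several threads at once.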

Optimizing Inference with TensorRT

TensorRT provides various optimization techniques to further improve inference performance. Some of these techniques include:

- Batching: Grouping multiple input samples into a single inference call to increase throughput; individual requests may wait slightly longer while a batch fills.

- FP16 precision: Reducing the precision of the model's weights and activations from FP32 to FP16, which can result in significant speedups.

- INT8 precision: Using 8-bit integer precision for model weights and activations, which can provide even further speedups but typically requires calibration data or quantization-aware training to preserve accuracy.

- Tensor Cores: Using the Tensor Cores in NVIDIA GPUs to accelerate matrix multiplication and other compute-intensive operations.
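To get a feel for what reduced precision does to the numbers themselves, the effect can be reproduced in plain Python. This is an illustrative sketch only and not TensorRT's internal implementation: it rounds values to FP16 with the standard struct module and applies a simple symmetric per-tensor INT8 scheme. The sample weights and the helper names are made up for the example.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def quantize_int8(values, scale):
    """Symmetric INT8 quantization: round(x / scale), clamped to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize_int8(quantized, scale):
    """Map INT8 codes back to approximate real values."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only to show the rounding behavior.
weights = [0.1, -0.25, 1.0, 0.0003]

# Per-tensor scale derived from the largest magnitude in the tensor.
scale = max(abs(w) for w in weights) / 127

fp16_weights = [to_fp16(w) for w in weights]
int8_codes = quantize_int8(weights, scale)
restored = dequantize_int8(int8_codes, scale)

for w, h, q, r in zip(weights, fp16_weights, int8_codes, restored):
    print(f"fp32={w!r} fp16={h!r} int8={q} dequantized={r:.6f}")
```

The FP16 values stay close to the originals, while the INT8 codes lose small values entirely (0.0003 maps to 0 here). That loss is why INT8 deployments usually need calibration to choose good scales.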

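Here's an example of enabling FP16 precision using the TensorRT Python API. In TensorRT 8 and later, precision flags are set on a builder configuration; network is assumed to be an already populated network definition, for example one parsed from an ONNX file:

import tensorrt as trt

builder = trt.Builder(trt.Logger(trt.Logger.INFO))

# Enable FP16 through the builder configuration
config = builder.create_builder_config()
config.set_flag(trt.BuilderFlag.FP16)

# Create the serialized TensorRT engine
serialized_engine = builder.build_serialized_network(network, config)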
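Whether a given optimization actually helps is best checked by measurement. The following is a minimal timing sketch using only the standard library; benchmark and dummy_infer are hypothetical names, and dummy_infer simply sleeps so that the example runs without a GPU. In practice, infer would be a zero-argument function that runs one inference on the engine under test.

```python
import statistics
import time


def benchmark(infer, warmup=10, iterations=100):
    """Time repeated calls to infer() and return latency statistics in milliseconds."""
    for _ in range(warmup):
        infer()  # warm-up runs are excluded from the measurement
    latencies_ms = []
    for _ in range(iterations):
        start = time.perf_counter()
        infer()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    latencies_ms.sort()
    p95_index = min(len(latencies_ms) - 1, int(0.95 * len(latencies_ms)))
    mean_ms = statistics.fmean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        "median_ms": statistics.median(latencies_ms),
        "p95_ms": latencies_ms[p95_index],
        "throughput_per_s": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


def dummy_infer():
    """Hypothetical stand-in for an engine call; sleeps for about one millisecond."""
    time.sleep(0.001)


stats = benchmark(dummy_infer, warmup=2, iterations=20)
print(stats)
```

Running the same benchmark against an FP32 engine and an FP16 engine gives a direct comparison. If inference is launched asynchronously, the CUDA stream should be synchronized inside infer so that the timer covers the full execution.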

Conclusion

Local model serving with TensorRT is an effective way to speed up inference and reduce latency on NVIDIA GPUs. By leveraging TensorRT's optimization and compilation capabilities, you can deploy machine learning models efficiently. Remember to explore the optimization techniques TensorRT provides, such as batching and reduced precision, and to measure their effect on your own models.

Ready to Optimize Your Inference?

Start exploring the world of TensorRT and optimize your inference workflow today!

Learn more about TensorRT and its capabilities by visiting the official NVIDIA TensorRT website: https://developer.nvidia.com/tensorrt

Get started with local model serving using TensorRT and experience the benefits of optimized inference for yourself!