Embeddings: The Bridge Between Text and Vector Space

- Author: Adil ABBADI

Introduction

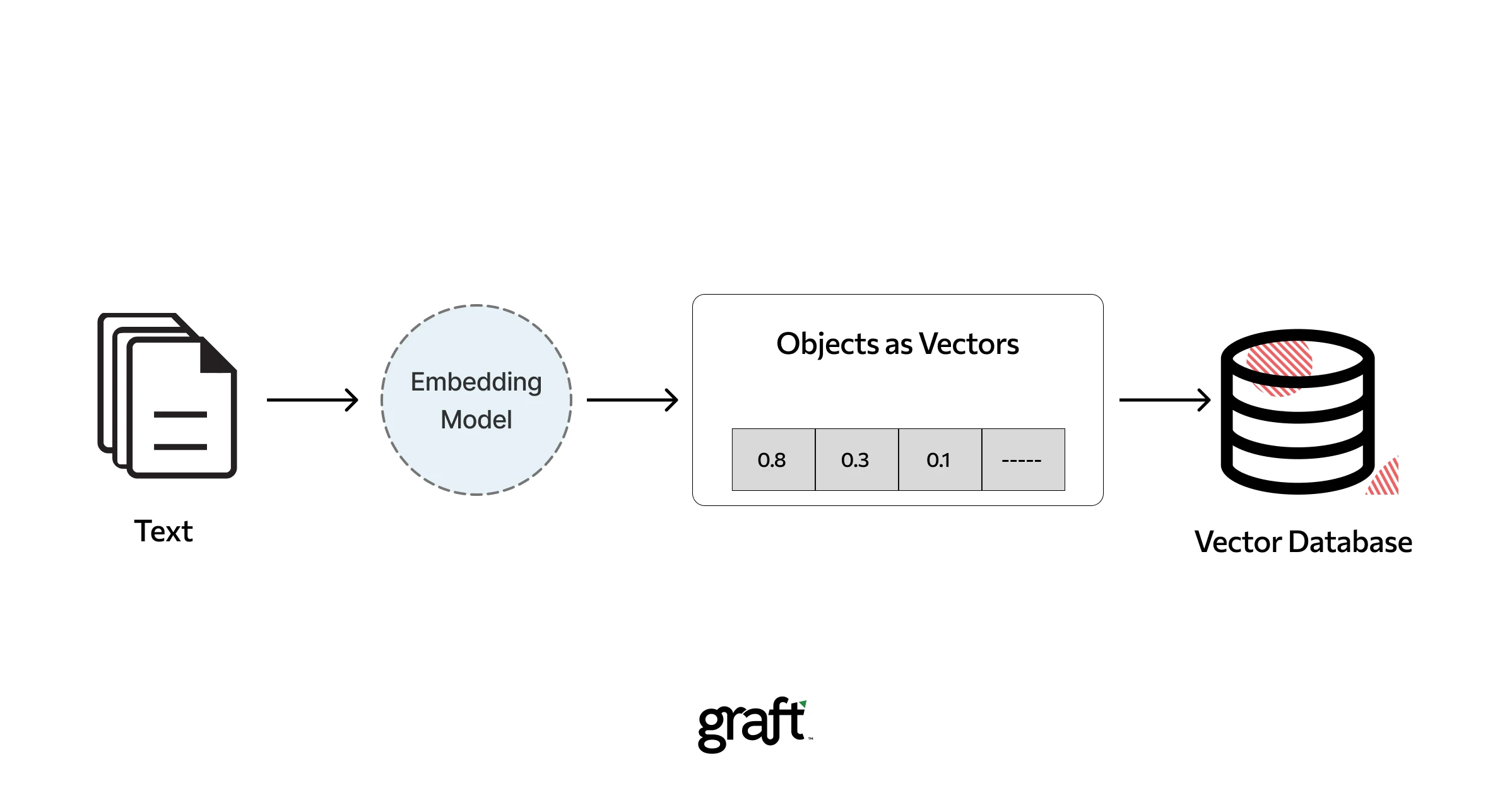

Natural language processing (NLP) depends on turning text into numbers that a model can work with, and embeddings are the standard way to do it. An embedding represents a word, phrase, or document as a dense vector of real numbers, often with a hundred or more dimensions, so that text can be compared and processed with ordinary vector arithmetic. In this article, we'll look at what embeddings are, how they are learned, and how they bridge the gap between text and vector space in practical NLP tasks.

- What are Embeddings?

- How are Embeddings Learned?

- Applications of Embeddings

- Conclusion

- Further Exploration

What are Embeddings?

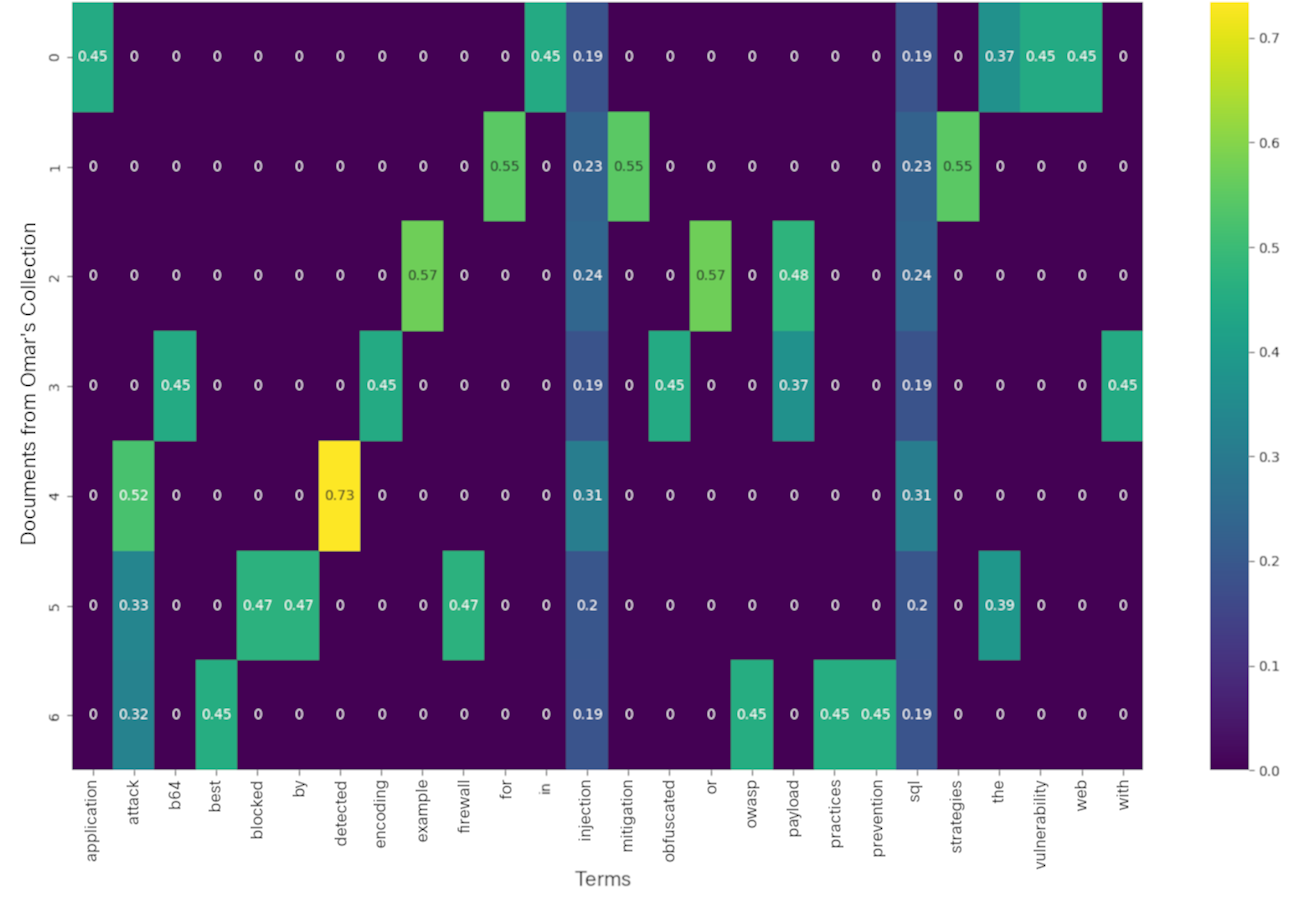

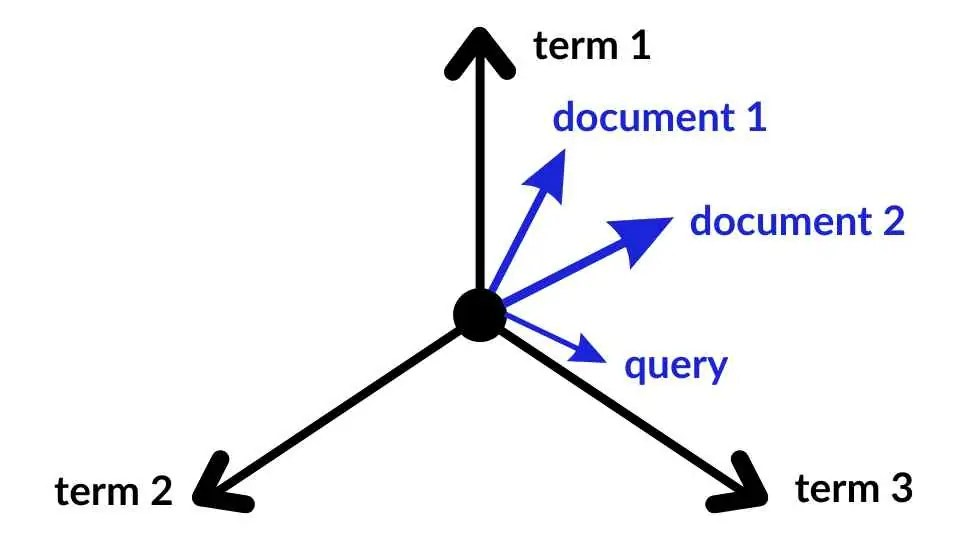

An embedding maps a word, phrase, or document to a vector, usually a dense array of floating-point numbers. Once text is in this form, machines can apply mathematical operations to it, which supports tasks such as sentiment analysis, text classification, and language translation. The core idea is that items with similar meaning are mapped to nearby points in the vector space, so geometric closeness (commonly measured with cosine similarity) stands in for semantic similarity.

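# Example of word embeddings using Gensim (4.x API)

from gensim.models import Word2Vec

# Toy corpus: each sentence is a list of tokens
sentences = [["cat", "say", "meow"], ["dog", "say", "woof"]]

# Gensim 4.0 renamed the `size` argument to `vector_size`
model = Word2Vec(sentences, vector_size=100, window=5, min_count=1)

# Returns (word, cosine similarity) pairs; on a corpus this small the scores are essentially noise
print(model.wv.most_similar("cat"))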
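The notion of "nearby points" is usually made concrete with cosine similarity, which is also the score that `most_similar` ranks by. The sketch below computes it by hand for three 3-dimensional vectors. The vectors and the `cosine_similarity` helper are illustrative assumptions, not output from a trained model.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical toy embeddings, chosen by hand for illustration
toy_vectors = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

cat_dog = cosine_similarity(toy_vectors["cat"], toy_vectors["dog"])
cat_car = cosine_similarity(toy_vectors["cat"], toy_vectors["car"])
print(f"cat vs dog: {cat_dog:.3f}")
print(f"cat vs car: {cat_car:.3f}")
```

With these hand-picked values, "cat" scores much closer to "dog" than to "car". A trained model aims to produce that kind of geometry from data rather than by hand.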

How are Embeddings Learned?

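Embeddings are typically learned in a self-supervised way: the model is trained on a large corpus of unlabeled text, and the training signal comes from the text itself, for example from which words appear near each other. Two widely used methods for learning word embeddings are Word2Vec and GloVe.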

Word2Vec

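Word2Vec trains a shallow neural network on word-context prediction within a sliding window. It comes in two architectures: Continuous Bag of Words (CBOW), which predicts a target word from its surrounding context words, and Skip-Gram, which predicts the surrounding context words from the target word.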

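# Word2Vec CBOW example (Gensim 4.x API)

from gensim.models import Word2Vec

sentences = [["cat", "say", "meow"], ["dog", "say", "woof"]]

# sg=0 selects CBOW (sg=1 would select Skip-Gram); `vector_size` was called `size` before Gensim 4.0
model = Word2Vec(sentences, vector_size=100, window=5, min_count=1, sg=0)

# Returns (word, cosine similarity) pairs; on a corpus this small the scores are essentially noise
print(model.wv.most_similar("cat"))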
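To make the difference between the two architectures concrete, the following sketch builds the training examples each one would see from a single tokenized sentence. It uses plain Python rather than Gensim. The helper names `context_window`, `skipgram_pairs`, and `cbow_examples` and the window size of 1 are assumptions for illustration, and real implementations add details such as subsampling and negative sampling.

```python
def context_window(tokens, i, window):
    """Tokens within `window` positions of index i, excluding tokens[i]."""
    left = tokens[max(0, i - window):i]
    right = tokens[i + 1:i + 1 + window]
    return left + right

def skipgram_pairs(tokens, window=1):
    """Skip-Gram: one (target, context_word) pair per context word."""
    return [(target, ctx)
            for i, target in enumerate(tokens)
            for ctx in context_window(tokens, i, window)]

def cbow_examples(tokens, window=1):
    """CBOW: one (context_words, target) example per position."""
    return [(context_window(tokens, i, window), target)
            for i, target in enumerate(tokens)]

sentence = ["cat", "say", "meow"]
print(skipgram_pairs(sentence))
print(cbow_examples(sentence))
```

Skip-Gram produces one training pair per neighbouring word, while CBOW groups all neighbours into a single example per position.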

GloVe

GloVe (Global Vectors) is a matrix factorization-style approach that learns word vectors from global co-occurrence statistics. It first counts how often each pair of words appears together across the whole corpus, then fits vectors whose dot products approximate the logarithm of those counts.

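# GloVe example: load pre-trained vectors from a text file

import numpy as np

# Pre-trained file, downloaded separately; each line is a word followed by its vector values
glove_file = 'glove.6B.100d.txt'

glove_dict = {}

with open(glove_file, 'r', encoding='utf-8') as f:

    for line in f:

        values = line.split()

        word = values[0]

        vector = np.asarray(values[1:], dtype='float32')

        glove_dict[word] = vector

print(glove_dict['cat']) # Output: a 100-dimensional float32 array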
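The co-occurrence statistics that GloVe starts from can be sketched in a few lines. The example below only counts how often word pairs fall within a window of each other. It does not fit any vectors, and the function name `cooccurrence_counts`, the window size, and the toy corpus are assumptions for illustration. The GloVe paper also down-weights more distant pairs, which is omitted here.

```python
from collections import Counter

def cooccurrence_counts(sentences, window=2):
    """Count symmetric co-occurrences of word pairs within `window` tokens."""
    counts = Counter()
    for tokens in sentences:
        for i, word in enumerate(tokens):
            # Look only to the right, then record the pair in both directions
            for j in range(i + 1, min(i + 1 + window, len(tokens))):
                counts[(word, tokens[j])] += 1
                counts[(tokens[j], word)] += 1
    return counts

corpus = [["cat", "say", "meow"], ["dog", "say", "woof"]]
counts = cooccurrence_counts(corpus)
print(counts[("cat", "say")])
print(counts[("say", "cat")])
print(counts[("cat", "dog")])
```

Words that never share a sentence, such as "cat" and "dog" here, get a count of zero. On a real corpus the table is large and sparse, which is why GloVe trains only on the non-zero entries.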

Applications of Embeddings

Embeddings have numerous applications in NLP, including:

Text Classification

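Embeddings give text classification models features that reflect meaning rather than exact word matches, which can improve accuracy when training and test texts use different wording. The example here uses TF-IDF features as a simple baseline: TF-IDF vectors are sparse word-weight vectors rather than learned embeddings, but they plug into a classifier in the same way.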

# Text classification baseline using scikit-learn (TF-IDF features, not learned embeddings)

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.naive_bayes import MultinomialNB

vectorizer = TfidfVectorizer()

X_train_vect = vectorizer.fit_transform(["This is a positive review", "This is a negative review"])

y_train = [1, 0]

clf = MultinomialNB()

clf.fit(X_train_vect, y_train)

# Words the vectorizer has never seen are ignored, so the test sentence
# reuses "positive", the only label-bearing word seen during training
print(clf.predict(vectorizer.transform(["This is a positive experience"]))) # Output: [1]
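For contrast with sparse TF-IDF features, here is a minimal sketch of classification on top of embeddings: each text is represented by the average of its word vectors and assigned to the class whose centroid is closest. The 2-dimensional vectors in `toy_embeddings` are made up for illustration, and `embed_text`, `centroid`, and `predict` are hypothetical helper names. In practice the vectors would come from a trained model such as Word2Vec or GloVe.

```python
import math

# Hypothetical 2-d embeddings: first axis ~ positive tone, second ~ negative tone
toy_embeddings = {
    "positive": [0.9, 0.1], "amazing": [0.8, 0.0],
    "negative": [0.1, 0.9], "awful": [0.0, 0.8],
}

def embed_text(text):
    """Average the vectors of known words; unknown words are skipped."""
    vectors = [toy_embeddings[w] for w in text.lower().split() if w in toy_embeddings]
    if not vectors:
        return [0.0, 0.0]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def centroid(texts):
    """Mean of the text embeddings for one class."""
    embedded = [embed_text(t) for t in texts]
    return [sum(dim) / len(embedded) for dim in zip(*embedded)]

train = {1: ["This is a positive review"], 0: ["This is a negative review"]}
centroids = {label: centroid(texts) for label, texts in train.items()}

def predict(text):
    """Return the label whose centroid is nearest in Euclidean distance."""
    vec = embed_text(text)
    return min(centroids, key=lambda label: math.dist(vec, centroids[label]))

print(predict("This is an amazing product"))
print(predict("What an awful experience"))
```

Neither "amazing" nor "awful" appears in the training sentences, yet both inputs land near the right centroid because their vectors sit close to "positive" and "negative". That generalization across wording is what embeddings add over exact word matching.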

Sentiment Analysis

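Sentiment analysis models can use embeddings to capture the emotional tone of text, which supports the analysis of customer feedback and opinions. The NLTK example here uses VADER, a lexicon- and rule-based analyzer rather than an embedding model, as a quick baseline.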

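# Sentiment analysis example using NLTK's VADER (lexicon-based, not embedding-based)

import nltk

from nltk.sentiment.vader import SentimentIntensityAnalyzer

nltk.download('vader_lexicon') # one-time download of the lexicon VADER needs

sia = SentimentIntensityAnalyzer()

# Output: a dict with 'neg', 'neu', 'pos', and 'compound' scores; 'compound' is positive for this sentence
print(sia.polarity_scores("I love this product!"))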

Conclusion

In conclusion, embeddings are a fundamental concept in NLP. By representing words, phrases, or documents as vectors in which distance reflects similarity in meaning, they bridge the gap between text and vector space and let standard numerical methods operate on textual information. Methods such as Word2Vec and GloVe learn these vectors from unlabeled text, and the resulting representations support applications including text classification, sentiment analysis, and language translation.

Further Exploration

To dive deeper into the world of embeddings, explore the following resources:

- Word2Vec paper: "Distributed Representations of Words and Phrases and their Compositionality" by Mikolov et al. (2013)

- GloVe paper: "GloVe: Global Vectors for Word Representation" by Pennington et al. (2014)

- Embeddings tutorial: "Embeddings in Natural Language Processing" by NVIDIA (2020)

Happy learning!