Demystifying MCP Server Building with Model Context Protocol

- Author: Adil ABBADI

Introduction

The rapid growth of artificial intelligence (AI) and machine learning (ML) has increased demand for servers that are efficient to build and easy to scale. One technology gaining prominence in this space is the Model Context Protocol (MCP) Server, which this article presents as a way to build and deploy AI models behind a standard interface. We'll look at what MCP is, which components make up an MCP Server, and what benefits the approach offers.

- Understanding Model Context Protocol

- Building an MCP Server

- Advantages of MCP Server

- Conclusion

- Explore Further

Understanding Model Context Protocol

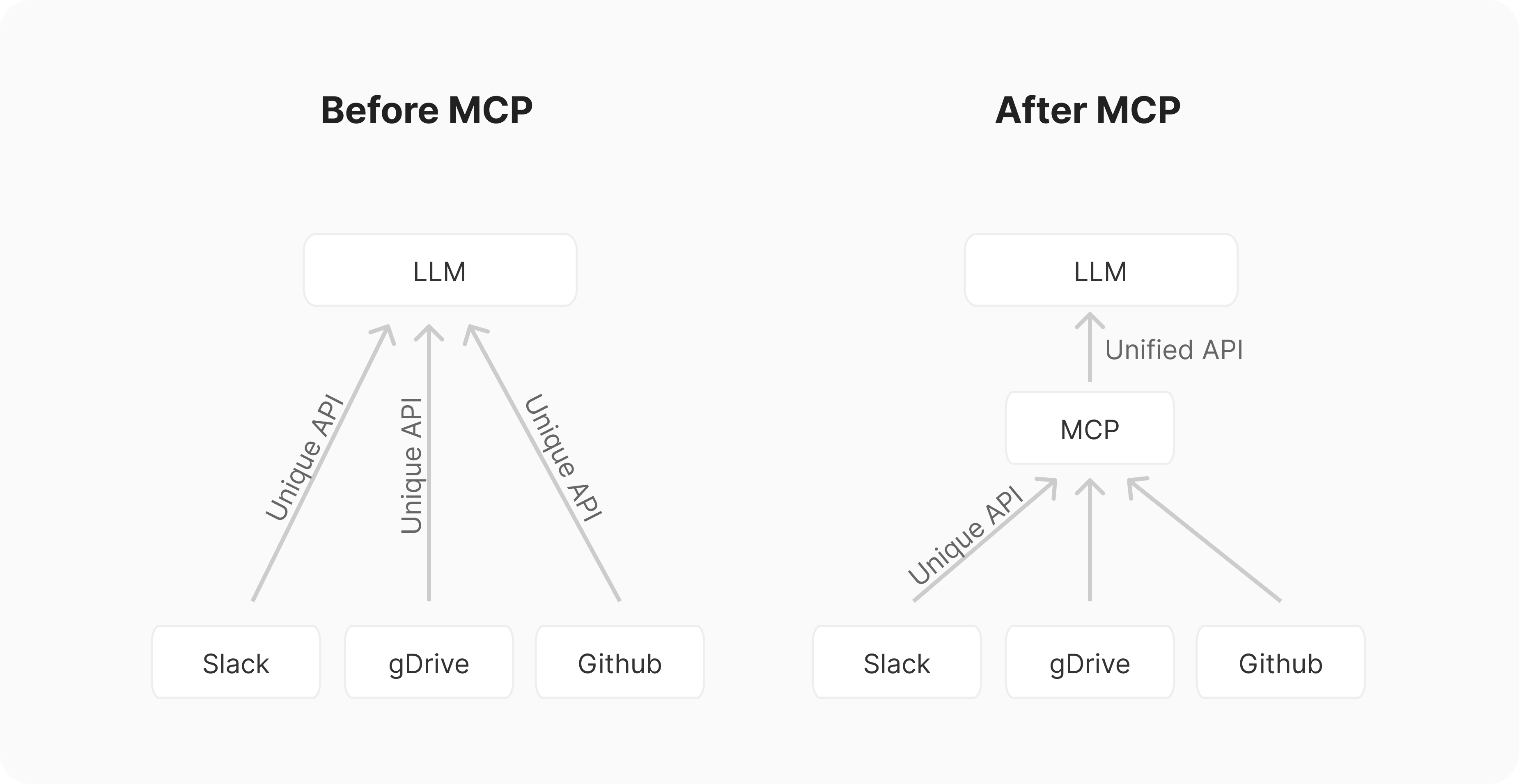

Model Context Protocol (MCP) is an open standard for communication between AI models, clients, and servers. In this article it is treated as a unified interface for model deployment, management, and inference, which makes it a good fit for large-scale AI deployments.

MCP enables developers to decouple model development from deployment, allowing for greater flexibility and scalability. By providing a standardized interface, MCP ensures that models can be easily swapped, updated, or replaced without affecting the underlying infrastructure.
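
To make the decoupling concrete, here is a minimal sketch in plain Python. The names `Model`, `ModelRegistry`, `MeanModel`, and `MaxModel` are hypothetical and do not come from any MCP library; the only point is that callers depend on an interface and a stable model name, so the implementation behind that name can be replaced without touching them.

```python
from typing import Protocol, Sequence


class Model(Protocol):
    """Hypothetical interface: anything with a predict() method counts as a model."""

    def predict(self, inputs: Sequence[float]) -> float: ...


class MeanModel:
    def predict(self, inputs: Sequence[float]) -> float:
        return sum(inputs) / len(inputs)


class MaxModel:
    def predict(self, inputs: Sequence[float]) -> float:
        return max(inputs)


class ModelRegistry:
    """Maps a stable model name to whichever implementation is currently deployed."""

    def __init__(self) -> None:
        self._models: dict[str, Model] = {}

    def deploy(self, name: str, model: Model) -> None:
        # Deploying under an existing name replaces the old model in place.
        self._models[name] = model

    def infer(self, name: str, inputs: Sequence[float]) -> float:
        return self._models[name].predict(inputs)


registry = ModelRegistry()
registry.deploy("my_model", MeanModel())
first = registry.infer("my_model", [1.0, 2.0, 6.0])

# Swap the implementation; the calling code and the model name stay the same.
registry.deploy("my_model", MaxModel())
second = registry.infer("my_model", [1.0, 2.0, 6.0])
```

Both calls to `registry.infer` are identical; only the object registered under `my_model` changed between them.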

Building an MCP Server

Building an MCP Server involves several key components:

1. Model Repository

A model repository is a centralized storage system that houses trained AI models. The models can come from different frameworks, such as TensorFlow, PyTorch, or scikit-learn, each with its own serialization format.

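```python
import os

# Module path as used in this article; adjust it to match the package you have installed.
from mcp_server.repository import ModelRepository

# Create a model repository rooted at ./models
repository = ModelRepository(os.path.join(os.getcwd(), 'models'))

# Register a model file under the name 'my_model'
repository.add_model('my_model', 'path/to/model/file')
```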
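
For readers who want to see what such a repository might do internally, the following is a rough, standard-library-only sketch. `LocalModelRepository` and its methods are hypothetical and are not the `ModelRepository` class imported above; the layout (one subdirectory per model name) is simply an assumption chosen for illustration.

```python
import shutil
import tempfile
from pathlib import Path


class LocalModelRepository:
    """Hypothetical directory-backed repository: one subdirectory per model name."""

    def __init__(self, root: Path) -> None:
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def add_model(self, name: str, source: Path) -> Path:
        # Copy the model file into <root>/<name>/, keeping its original file name.
        source = Path(source)
        model_dir = self.root / name
        model_dir.mkdir(parents=True, exist_ok=True)
        target = model_dir / source.name
        shutil.copyfile(source, target)
        return target

    def get_model_path(self, name: str) -> Path:
        model_dir = self.root / name
        files = sorted(model_dir.iterdir()) if model_dir.is_dir() else []
        if not files:
            raise KeyError(f"no model named {name!r}")
        return files[0]

    def list_models(self) -> list[str]:
        return sorted(p.name for p in self.root.iterdir() if p.is_dir())


# Usage with a throwaway directory and a stand-in "model" file.
workdir = Path(tempfile.mkdtemp())
model_file = workdir / "weights.bin"
model_file.write_bytes(b"\x00\x01\x02")

repo = LocalModelRepository(workdir / "models")
repo.add_model("my_model", model_file)
```

Copying the file into the repository, rather than storing a reference to the original path, keeps the repository self-contained when the source file is later moved or deleted.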

2. Server Configuration

A configuration file tells the MCP Server where the model repository lives, which inference engine to use, and which port to listen on.

```yaml
server:
  repository:
    type: local
    path: /path/to/model/repository
  inference_engine:
    type: tensorflow
  port: 8080
```
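
Once a configuration like the one above has been parsed, it helps to validate it before the server starts. The sketch below assumes that `repository`, `inference_engine`, and `port` are all nested under `server`, and it starts from an already-parsed dictionary so that it needs no YAML library. `ServerConfig`, `RepositoryConfig`, and `parse_server_config` are hypothetical names, and the validation rules are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RepositoryConfig:
    type: str
    path: str


@dataclass(frozen=True)
class ServerConfig:
    repository: RepositoryConfig
    inference_engine: str
    port: int


def parse_server_config(raw: dict) -> ServerConfig:
    """Validate a parsed configuration and convert it to typed objects."""
    server = raw["server"]
    port = server["port"]
    if not isinstance(port, int) or not 1 <= port <= 65535:
        raise ValueError(f"invalid port: {port!r}")
    repo = server["repository"]
    # Assumption for this sketch: only the 'local' repository type is supported.
    if repo["type"] != "local":
        raise ValueError(f"unsupported repository type: {repo['type']!r}")
    return ServerConfig(
        repository=RepositoryConfig(type=repo["type"], path=repo["path"]),
        inference_engine=server["inference_engine"]["type"],
        port=port,
    )


# The same settings as in the configuration above, as a YAML parser would return them.
raw_config = {
    "server": {
        "repository": {"type": "local", "path": "/path/to/model/repository"},
        "inference_engine": {"type": "tensorflow"},
        "port": 8080,
    }
}
config = parse_server_config(raw_config)
```

Failing fast on a bad port or an unknown repository type is usually easier to debug than an error that surfaces only when the first request arrives.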

3. Inference Engine

The inference engine is responsible for executing AI models and generating predictions. Common choices include the TensorFlow and PyTorch runtimes and OpenVINO.

```python
from mcp_server.inference import TensorFlowInferenceEngine

# Create a TensorFlow inference engine instance
inference_engine = TensorFlowInferenceEngine()
```
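
One way to let a server pick its engine from a configuration value such as `type: tensorflow` is a small registry of engine classes. The sketch below is an assumption about how that could be wired up, not the article's library: `InferenceEngine`, `register_engine`, `create_engine`, and the toy `LinearEngine` are all hypothetical, and the toy engine just computes a weighted sum so the example runs without any ML framework.

```python
from abc import ABC, abstractmethod
from typing import Callable, Sequence


class InferenceEngine(ABC):
    """Hypothetical common interface that every engine implements."""

    @abstractmethod
    def predict(self, weights: Sequence[float], inputs: Sequence[float]) -> float: ...


# Registry mapping a configuration string to a zero-argument engine factory.
ENGINES: dict[str, Callable[[], InferenceEngine]] = {}


def register_engine(name: str):
    """Class decorator that records an engine under the given type name."""

    def decorator(cls):
        ENGINES[name] = cls
        return cls

    return decorator


@register_engine("linear")
class LinearEngine(InferenceEngine):
    """Toy engine: the prediction is the dot product of weights and inputs."""

    def predict(self, weights: Sequence[float], inputs: Sequence[float]) -> float:
        if len(weights) != len(inputs):
            raise ValueError("weights and inputs must have the same length")
        return sum(w * x for w, x in zip(weights, inputs))


def create_engine(engine_type: str) -> InferenceEngine:
    try:
        factory = ENGINES[engine_type]
    except KeyError:
        raise ValueError(f"unknown inference engine: {engine_type!r}") from None
    return factory()


engine = create_engine("linear")
score = engine.predict([0.5, 2.0], [4.0, 1.0])
```

A real TensorFlow or PyTorch engine would register itself the same way and wrap the framework's own loading and prediction calls behind `predict`.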

4. Client Integration

To interact with the MCP Server, clients can use an MCP client library, which wraps model inference and management calls in a convenient interface.

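```python
from mcp_client import MCPClient

# Create an MCP client pointed at the server's address and port
client = MCPClient('http://localhost:8080')

# Placeholder input; the expected shape depends on the model being served
input_data = [[1.0, 2.0, 3.0]]

# Perform inference on a model
response = client.infer('my_model', input_data)
```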
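
To show what a client call like this could look like on the wire, here is a self-contained round trip using only the Python standard library. It is a sketch under stated assumptions: the `/infer` route, the JSON payload shape, and the `infer` helper are invented for illustration and are not taken from the MCP specification or from the `mcp_client` package used above.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.request import Request, urlopen

# Stand-in "models": plain functions keyed by name.
MODELS = {"my_model": lambda values: sum(values)}


class InferHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        # Read the JSON body and look up the requested model by name.
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        model = MODELS.get(payload.get("model"))
        if model is None:
            self._reply(404, {"error": "unknown model"})
        else:
            self._reply(200, {"prediction": model(payload["inputs"])})

    def _reply(self, status: int, body: dict) -> None:
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args) -> None:
        pass  # Keep the example quiet.


def infer(base_url: str, model: str, inputs: list) -> dict:
    """Hypothetical client helper: POST the inputs and return the decoded reply."""
    body = json.dumps({"model": model, "inputs": inputs}).encode("utf-8")
    request = Request(
        f"{base_url}/infer",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urlopen(request, timeout=5) as response:
        return json.loads(response.read())


def demo() -> dict:
    # Port 0 asks the operating system for any free port.
    server = ThreadingHTTPServer(("127.0.0.1", 0), InferHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return infer(f"http://127.0.0.1:{server.server_port}", "my_model", [1, 2, 3])
    finally:
        server.shutdown()
        server.server_close()
```

Calling `demo()` starts the server on a background thread, sends one request, and shuts the server down again, which makes the sketch safe to run in a test.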

Advantages of MCP Server

The MCP Server offers several benefits, including:

Scalability

MCP Server supports horizontal scaling: because clients reach every node through the same interface, developers can add or remove nodes as workloads change.
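
As a rough illustration of adding and removing nodes, the sketch below spreads requests across server nodes in round-robin order. `NodePool` and the node addresses are hypothetical; a production deployment would more likely rely on a load balancer or an orchestrator than on client-side code like this.

```python
class NodePool:
    """Hypothetical round-robin pool of server node addresses."""

    def __init__(self, nodes=()) -> None:
        self._nodes = list(nodes)
        self._next = 0

    def add_node(self, address: str) -> None:
        # Scale out: new nodes join the rotation immediately.
        if address not in self._nodes:
            self._nodes.append(address)

    def remove_node(self, address: str) -> None:
        # Scale in: the node stops receiving new requests.
        self._nodes.remove(address)

    def pick(self) -> str:
        if not self._nodes:
            raise RuntimeError("no nodes available")
        node = self._nodes[self._next % len(self._nodes)]
        self._next += 1
        return node


pool = NodePool(["node-a:8080", "node-b:8080"])
first_round = [pool.pick() for _ in range(4)]

# Add a third node under load; subsequent picks include it.
pool.add_node("node-c:8080")
after_scale_out = [pool.pick() for _ in range(3)]

# Remove a node; it no longer appears in the rotation.
pool.remove_node("node-a:8080")
after_scale_in = [pool.pick() for _ in range(4)]
```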

Flexibility

With MCP Server, developers can swap or update models without affecting the underlying infrastructure, ensuring greater flexibility and adaptability.

Standardization

MCP provides a standardized interface for AI model deployment, making it easier to integrate models from different frameworks and vendors.

Conclusion

In this article, we've looked at MCP Server and what it offers for AI and machine learning development. By putting model deployment and management behind a standardized interface, an MCP Server lets developers update, swap, and scale models without reworking the surrounding infrastructure. As the AI landscape continues to evolve, that kind of standardization is likely to matter more.

Explore Further

To learn more about MCP Server and its implementation, be sure to check out the official documentation and explore the growing community of developers and researchers working with this technology.