Unlocking the Power of Vector Search Optimization for AI Applications

- Author: Adil ABBADI

Introduction

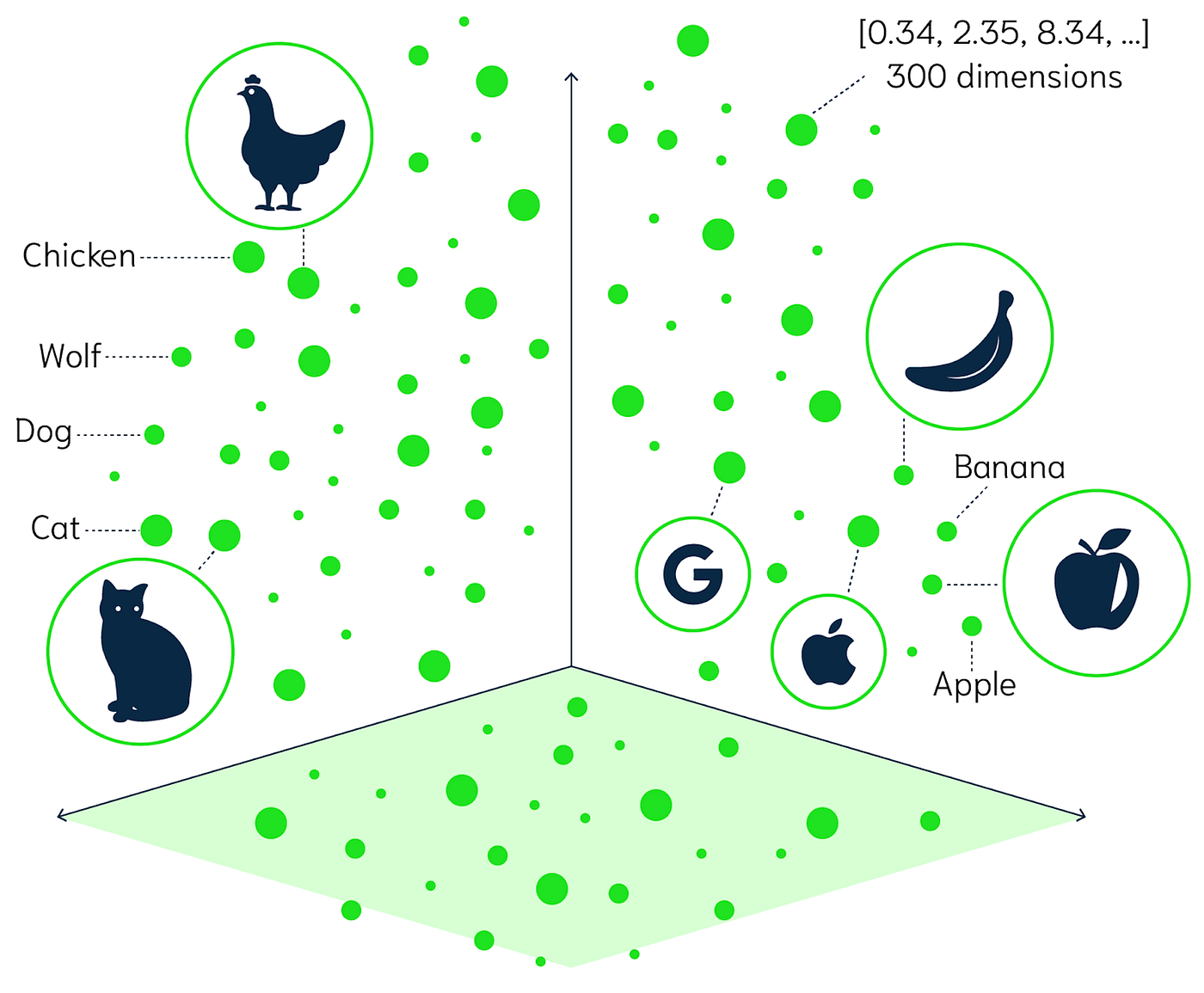

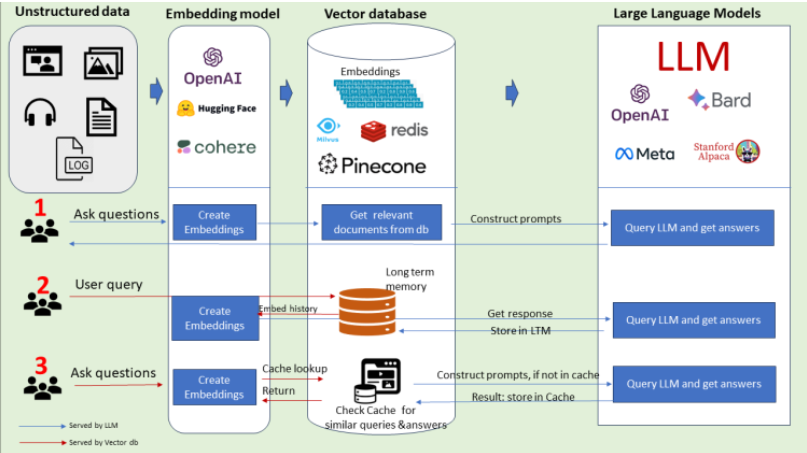

Vector search has become an indispensable component of various AI applications, including computer vision, natural language processing, and recommender systems. The ability to efficiently search for similar vectors in high-dimensional spaces is critical to the performance and accuracy of these systems. However, as the complexity and scale of AI applications continue to grow, the need for optimized vector search techniques becomes increasingly important. In this article, we'll explore the world of vector search optimization, discussing its significance, techniques, and applications in AI.

- The Importance of Vector Search Optimization

- Techniques for Vector Search Optimization

- Applications of Vector Search Optimization in AI

- Conclusion

- Further Exploration

The Importance of Vector Search Optimization

Vector search optimization is essential for several reasons:

- Scalability: Brute-force search compares the query against every stored vector, so its cost grows linearly with dataset size and becomes impractical for large collections. Optimized techniques examine only a small fraction of the data per query.

- Accuracy: Most fast search methods are approximate, and a poorly tuned index can miss true nearest neighbors, which degrades downstream results. Careful optimization keeps recall high while staying within a latency budget.

- Performance: Vector search optimization techniques can significantly reduce the memory, compute, and time required for search operations.
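As a reference point for the list above, here is a minimal sketch of the brute-force baseline together with a recall measure for judging approximate results. The function names `exact_knn` and `recall_at_k`, the random data, and the choice of Euclidean distance are assumptions made for illustration only.

```python
import numpy as np


def exact_knn(data: np.ndarray, queries: np.ndarray, k: int) -> np.ndarray:
    """Return the indices of the k nearest rows of `data` for each query (L2)."""
    # Squared distances via ||q - x||^2 = ||q||^2 - 2 q.x + ||x||^2
    d2 = (
        (queries ** 2).sum(axis=1, keepdims=True)
        - 2.0 * queries @ data.T
        + (data ** 2).sum(axis=1)
    )
    # argpartition selects the k smallest per row; only those k are then sorted
    part = np.argpartition(d2, k - 1, axis=1)[:, :k]
    order = np.take_along_axis(d2, part, axis=1).argsort(axis=1)
    return np.take_along_axis(part, order, axis=1)


def recall_at_k(approx_ids: np.ndarray, exact_ids: np.ndarray) -> float:
    """Fraction of the true neighbors that an approximate result recovered."""
    hits = sum(len(set(a) & set(e)) for a, e in zip(approx_ids, exact_ids))
    return hits / exact_ids.size


rng = np.random.default_rng(0)
data = rng.random((1000, 128), dtype=np.float32)
queries = data[:5]

# Every query touches all 1000 vectors: this is the cost that indexes try to avoid
exact = exact_knn(data, queries, k=10)
```

Passing the output of any approximate index as `approx_ids` gives a single number between 0 and 1 that can be tracked while tuning index parameters.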

Techniques for Vector Search Optimization

Several techniques are employed to optimize vector search for AI applications:

1. Indexing Methods

Indexing methods, such as ball trees, k-d trees, random projection trees, and hierarchical navigable small world (HNSW) graphs, organize the dataset so that a query only needs to examine a small fraction of the vectors. The example below uses Annoy, which builds a forest of random projection trees.

import numpy as np
from annoy import AnnoyIndex

# Create an index for 128-dimensional vectors using angular distance
t = AnnoyIndex(128, 'angular')

# Add 1000 random vectors to the index
for i in range(1000):
    v = np.random.rand(128)
    t.add_item(i, v)

# Build the index with 10 trees (more trees improve recall but enlarge the index)
t.build(10)

# Query the index for the 10 nearest neighbors of the last vector added
similar_items = t.get_nns_by_vector(v, 10)
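To show what a tree index does internally, the following is a simplified sketch of a single random projection tree in plain NumPy. It is written in the spirit of Annoy's splitting rule (a hyperplane equidistant from two sampled points) but is not Annoy's implementation; `build_tree`, `query_tree`, and `leaf_size` are hypothetical names chosen for this example.

```python
import numpy as np


def build_tree(data, ids, rng, leaf_size=32):
    """Recursively split `ids` with random hyperplanes until leaves are small."""
    if len(ids) <= leaf_size:
        return {"leaf": ids}
    # Sample two distinct points; the split plane is equidistant from both
    a, b = data[rng.choice(ids, size=2, replace=False)]
    normal = a - b
    offset = normal @ (a + b) / 2.0
    side = data[ids] @ normal > offset
    if side.all() or not side.any():  # degenerate split, e.g. duplicate points
        return {"leaf": ids}
    return {
        "normal": normal,
        "offset": offset,
        "left": build_tree(data, ids[~side], rng, leaf_size),
        "right": build_tree(data, ids[side], rng, leaf_size),
    }


def query_tree(tree, data, q, k):
    """Descend to one leaf, then rank only that leaf's points exactly."""
    node = tree
    while "leaf" not in node:
        go_right = q @ node["normal"] > node["offset"]
        node = node["right"] if go_right else node["left"]
    cand = node["leaf"]
    dist = np.linalg.norm(data[cand] - q, axis=1)
    return cand[np.argsort(dist)[:k]]


rng = np.random.default_rng(0)
data = rng.random((1000, 128))
tree = build_tree(data, np.arange(len(data)), rng)

# Only the points in one leaf are compared against the query
neighbors = query_tree(tree, data, data[42], k=5)
```

A single tree can miss true neighbors that fall on the other side of a split, and a leaf may hold fewer than `k` points. This is why tree-based libraries typically build several trees and merge their candidates.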

2. Quantization Methods

Quantization methods, such as product quantization (PQ) and additive quantization (AQ), compress each vector into a short code. This cuts memory use and speeds up distance computation at the cost of some precision.

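import numpy as np
from faiss import IndexPQ

# Create a Faiss PQ index for 128-dimensional vectors:
# 16 sub-quantizers of 8 bits each, i.e. 16 bytes per vector
index = IndexPQ(128, 16, 8)

# Train the codebooks, then add vectors to the index
# (Faiss may warn that 1000 points is a small training set for 256 centroids)
vectors = np.random.rand(1000, 128).astype('float32')
index.train(vectors)
index.add(vectors)

# Query the index
distances, indices = index.search(vectors, 10)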
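The mechanics of product quantization can be sketched in plain NumPy: split each vector into sub-vectors, learn a small codebook per sub-space with k-means, and store only centroid indices. This is an illustrative sketch rather than how Faiss implements it. The helper names (`kmeans`, `pq_train`, `pq_encode`, `pq_search`) and the sizes (8 sub-spaces, 16 centroids each) are assumptions chosen to keep the example small.

```python
import numpy as np


def kmeans(x, n_clusters, rng, n_iter=10):
    """Tiny Lloyd's k-means; returns the centroids."""
    centroids = x[rng.choice(len(x), size=n_clusters, replace=False)].copy()
    for _ in range(n_iter):
        d2 = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        assign = d2.argmin(axis=1)
        for c in range(n_clusters):
            members = x[assign == c]
            if len(members):  # keep the old centroid if a cluster empties
                centroids[c] = members.mean(axis=0)
    return centroids


def pq_train(x, m, n_centroids, rng):
    """Learn one codebook per sub-space; result shape (m, n_centroids, d // m)."""
    return np.stack([kmeans(sub, n_centroids, rng) for sub in np.split(x, m, axis=1)])


def pq_encode(x, codebooks):
    """Replace each sub-vector with the index of its nearest centroid."""
    subs = np.split(x, len(codebooks), axis=1)
    codes = [
        ((sub[:, None, :] - cb[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
        for sub, cb in zip(subs, codebooks)
    ]
    return np.stack(codes, axis=1).astype(np.uint8)


def pq_search(q, codes, codebooks, k):
    """Asymmetric distance: uncompressed query against quantized database."""
    q_subs = np.split(q, len(codebooks))
    # table[j, c] = squared distance from query sub-vector j to centroid c
    table = np.stack([((cb - qs) ** 2).sum(axis=1) for qs, cb in zip(q_subs, codebooks)])
    # Look up and sum the per-sub-space distances for every stored code
    approx_d2 = table[np.arange(len(codebooks)), codes].sum(axis=1)
    return np.argsort(approx_d2)[:k]


rng = np.random.default_rng(0)
data = rng.random((1000, 64))
codebooks = pq_train(data, m=8, n_centroids=16, rng=rng)
codes = pq_encode(data, codebooks)  # 8 one-byte codes per vector
top = pq_search(data[0], codes, codebooks, k=10)
```

Because distances are computed from a small lookup table instead of the full vectors, search cost per stored vector drops from one operation per dimension to one table lookup per sub-space. The returned ranking is approximate, since each sub-vector has been rounded to its nearest centroid.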

3. Approximation Methods

Approximation methods, such as locality-sensitive hashing (LSH) and tree-based methods, return results that are close to, but not guaranteed to match, the exact nearest neighbors, trading accuracy for speed. scikit-learn's LSHForest was removed in version 0.21, so the example below uses Faiss's IndexLSH instead.

import numpy as np
from faiss import IndexLSH

# Create an LSH index that hashes 128-dimensional vectors to 256-bit codes
index = IndexLSH(128, 256)

# Add the dataset to the index
vectors = np.random.rand(1000, 128).astype('float32')
index.add(vectors)

# Query the index; distances are computed between the binary codes
distances, indices = index.search(vectors, 10)
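To make the hashing idea concrete without relying on any library, here is a minimal random-hyperplane LSH sketch in NumPy. It uses the bit signatures to build a Hamming-distance shortlist and then re-ranks that shortlist exactly, rather than using hash-table buckets. The names `signature` and `lsh_query` and the parameters `n_bits` and `n_candidates` are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, n_bits = 128, 16

data = rng.standard_normal((1000, dim))
planes = rng.standard_normal((n_bits, dim))  # one random hyperplane per bit


def signature(x):
    """Side of each hyperplane that x falls on, as an array of 0/1 bits."""
    return (x @ planes.T > 0).astype(np.uint8)


def lsh_query(q, data_bits, k, n_candidates=100):
    """Shortlist by Hamming distance on signatures, then re-rank by cosine."""
    hamming = (data_bits != signature(q)).sum(axis=1)
    shortlist = np.argsort(hamming, kind="stable")[:n_candidates]
    cand = data[shortlist]
    sims = cand @ q / (np.linalg.norm(cand, axis=1) * np.linalg.norm(q))
    return shortlist[np.argsort(-sims)[:k]]


data_bits = signature(data)  # computed once, at indexing time
neighbors = lsh_query(data[7], data_bits, k=10)
```

Vectors separated by a small angle tend to fall on the same side of most hyperplanes, so a small Hamming distance is a cheap proxy for high cosine similarity. Raising `n_candidates` improves recall at the cost of more exact comparisons.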

Applications of Vector Search Optimization in AI

Vector search optimization techniques have numerous applications in AI, including:

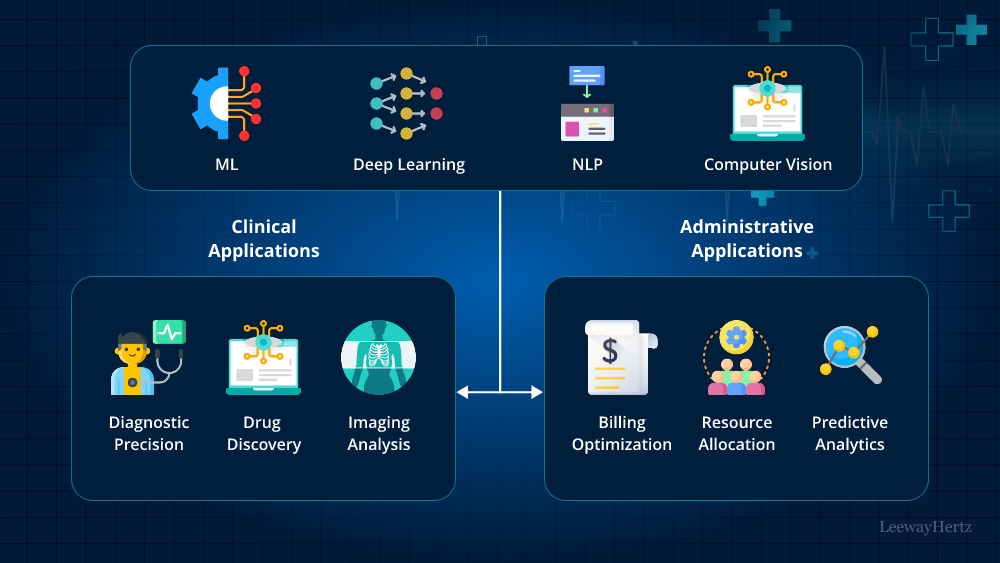

1. Computer Vision

Optimized vector search enables efficient image retrieval, facial recognition, and object detection in computer vision applications.

2. Natural Language Processing

Vector search optimization is crucial for efficient document retrieval, text classification, and topic modeling in natural language processing applications.

3. Recommender Systems

Optimized vector search powers personalized recommendations, helping users discover new products, services, and content.
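As a toy illustration of the recommender case, the sketch below ranks items by cosine similarity to the average of the items a user liked. The random item embeddings, the `recommend` function, and the liked item IDs are all hypothetical. In a real system the embeddings would come from a trained model and the exact scan would be replaced by one of the indexes discussed earlier.

```python
import numpy as np

rng = np.random.default_rng(0)
item_vecs = rng.standard_normal((500, 32))
item_vecs /= np.linalg.norm(item_vecs, axis=1, keepdims=True)  # unit length


def recommend(liked_ids, k=5):
    """Rank unseen items by similarity to the mean of the liked items."""
    profile = item_vecs[liked_ids].mean(axis=0)
    # Items are unit-length, so this dot product is proportional to cosine similarity
    scores = item_vecs @ profile
    scores[liked_ids] = -np.inf  # never recommend what the user already has
    return np.argsort(-scores)[:k]


recs = recommend([3, 14, 15])
```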

Conclusion

Vector search optimization is a critical component of AI applications, enabling efficient similarity searches in high-dimensional spaces. By employing techniques such as indexing, quantization, and approximation, developers can substantially reduce search latency and memory use while keeping accuracy close to that of exact search. As the complexity and scale of AI applications continue to grow, so will the importance of vector search optimization.

Further Exploration

To delve deeper into the world of vector search optimization, we recommend exploring the following resources:

- Faiss: A library for efficient similarity search and clustering of dense vectors.

- Annoy: A library for efficient approximate nearest neighbors.

- hnswlib: A library for efficient similarity search using hierarchical navigable small world (HNSW) graphs.

Remember to stay curious, keep exploring, and optimize your vector search for the next generation of AI applications!