Unleashing the Power of BERT: Understanding its Applications in AI

Author: Adil ABBADI

Introduction

In recent years, the artificial intelligence (AI) landscape has seen a major breakthrough with the introduction of BERT (Bidirectional Encoder Representations from Transformers). Released by Google researchers in 2018, this language model changed how machines represent and process human language, achieving state-of-the-art results on a wide range of natural language processing (NLP) benchmarks, including GLUE and SQuAD, at the time of its release. In this article, we'll explore how BERT works, where it is applied, and the impact it has had on the AI community.

- The Evolution of Language Models

- Introducing BERT: A Game-Changer in NLP

- BERT in Action: Applications and Use Cases

- Conclusion

- Unlocking the Potential of BERT: What's Next?

The Evolution of Language Models

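Before diving into BERT, it's worth understanding how language models evolved. Early approaches captured little context. Count-based n-gram models estimate the probability of a word from only the few words immediately before it, and bag-of-words representations discard word order altogether. Word embedding methods such as Word2Vec and GloVe learned dense vectors that capture semantic similarity, but they assign each word a single static vector regardless of context, so "bank" is represented identically in "river bank" and "bank account".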
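Both limitations can be illustrated in a few lines. The sketch below is a toy: `bag_of_words` and `embed` are hypothetical helpers, and the 3-dimensional vectors are made-up values rather than real Word2Vec or GloVe weights.

```python
from collections import Counter


def bag_of_words(sentence: str) -> Counter:
    """Count lowercase word occurrences, discarding word order."""
    return Counter(sentence.lower().split())


# Word order is lost: both sentences map to the same representation.
bow_a = bag_of_words("dog bites man")
bow_b = bag_of_words("man bites dog")
print(bow_a == bow_b)

# A static embedding is a plain lookup table with one vector per word type.
# These vectors are made-up toy values, not real GloVe/Word2Vec weights.
static_embeddings = {
    "bank": [0.2, -0.1, 0.7],
    "river": [0.5, 0.3, -0.2],
    "account": [-0.4, 0.6, 0.1],
}


def embed(sentence: str) -> list[list[float]]:
    """Look up each known word's vector; surrounding words never change the result."""
    return [static_embeddings[w] for w in sentence.lower().split() if w in static_embeddings]


river_bank = embed("river bank")
bank_account = embed("bank account")
# "bank" receives the identical vector in both contexts.
print(river_bank[1] == bank_account[0])
```

Both checks print `True`: the bag-of-words model cannot tell who bit whom, and the static lookup cannot tell a riverbank from a financial institution.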

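Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks improved language modeling by reading text one token at a time and carrying a hidden state forward. However, this sequential processing is hard to parallelize, and the training signal between distant tokens tends to vanish (or explode) as it is propagated back through many steps; LSTM gating mitigates this problem but does not eliminate it. Standard RNN language models also read text in a single direction, so each word's representation reflects only the words that came before it.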
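Why distant dependencies are hard can be seen with a deliberately simplified recurrence. The sketch assumes a scalar linear RNN, h_t = w * h_{t-1} + x_t, with no nonlinearity and no weight matrices; `gradient_through_time` is a hypothetical helper. By the chain rule, the derivative of the last state with respect to the first is a product of one factor per step, so it shrinks or grows exponentially with distance.

```python
def gradient_through_time(w: float, steps: int) -> float:
    """Derivative of h_T with respect to h_0 for the toy recurrence h_t = w * h_{t-1} + x_t.

    Each step contributes a factor dh_t/dh_{t-1} = w, so the derivative
    is the product of `steps` copies of w (i.e. w ** steps).
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w  # multiply in one per-step Jacobian (a scalar in this toy model)
    return grad


# With |w| < 1 the signal from early tokens fades as the distance grows.
for steps in (1, 10, 50, 100):
    print(steps, gradient_through_time(0.9, steps))
```

With w = 0.9, the signal after 100 steps is already below 1e-4 of its original size; with |w| > 1 it would instead explode. Real RNNs multiply Jacobian matrices rather than scalars, but the same compounding effect applies.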

Introducing BERT: A Game-Changer in NLP

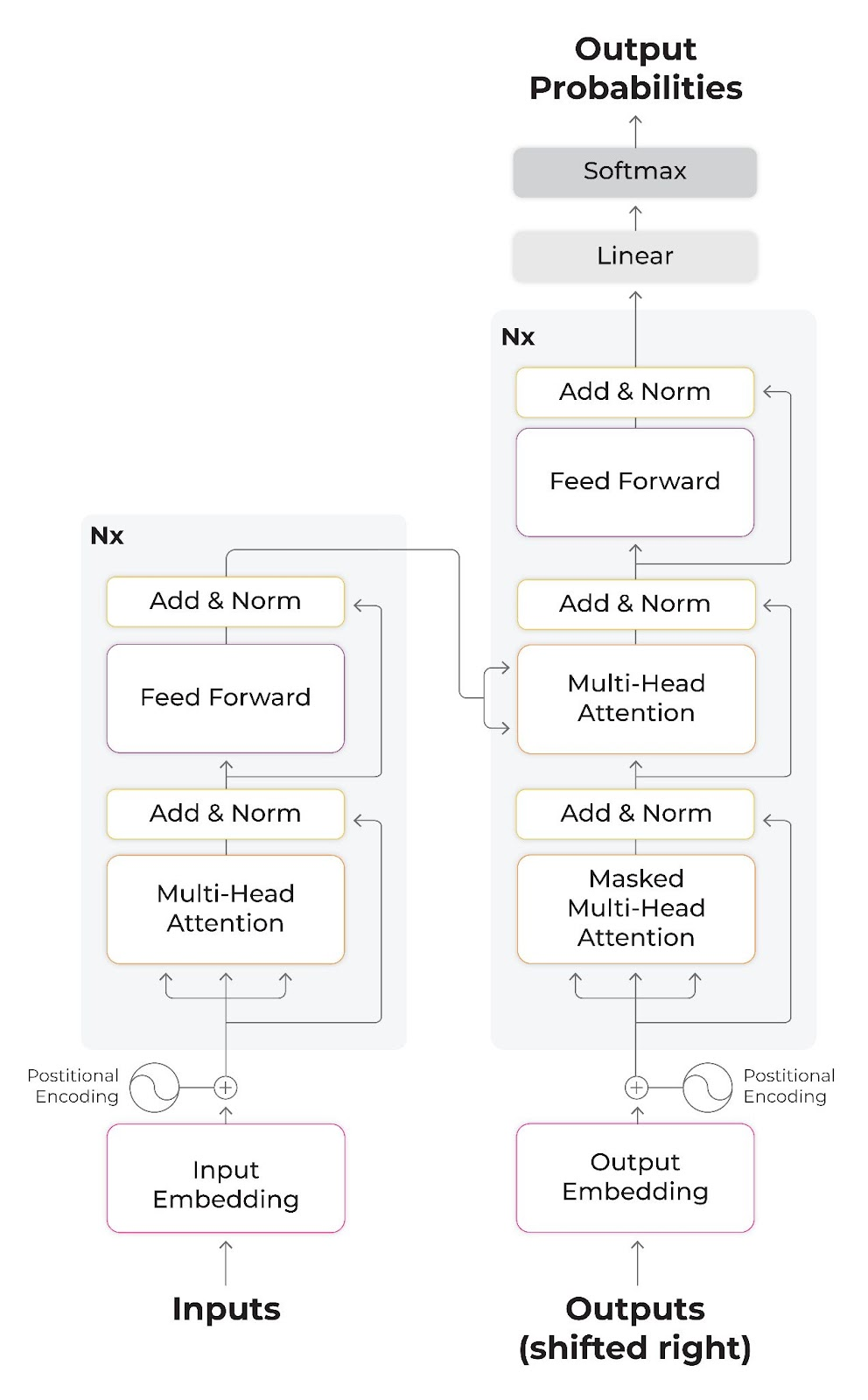

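BERT, developed by researchers at Google and published in 2018, marked a significant departure from earlier language models. It is built on the encoder part of the Transformer architecture: a stack of self-attention layers (12 in BERT-Base, 24 in BERT-Large) in which every token attends to all other tokens in the input, both to its left and to its right. BERT is pre-trained on unlabeled text with two objectives, masked language modeling (predicting randomly hidden tokens from their surrounding context) and next sentence prediction. The result is a contextualized representation for each token: the word "bank" receives different vectors in "river bank" and "bank account", which lets the model capture context, syntax, and semantics.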
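The bidirectional part can be made concrete with a stripped-down version of scaled dot-product self-attention. This is a sketch under simplifying assumptions: the query, key, and value projections are the identity (real BERT learns separate weight matrices and uses multiple heads), and `self_attention` is a hypothetical helper. The `causal` flag contrasts BERT-style attention with a left-to-right language model.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax; -inf scores become weight 0."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: list[list[float]], causal: bool = False):
    """Scaled dot-product self-attention with identity Q/K/V projections (a simplification).

    With causal=False (BERT-style), every position attends to all positions,
    left and right. With causal=True (left-to-right LM style), position i
    only attends to positions 0..i.
    """
    d = len(x[0])
    outputs, weights = [], []
    for i, q in enumerate(x):
        scores = []
        for j, k in enumerate(x):
            if causal and j > i:
                scores.append(float("-inf"))  # block attention to future tokens
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        w = softmax(scores)
        weights.append(w)
        # Output i is the attention-weighted average of all value vectors.
        outputs.append([sum(w[j] * x[j][c] for j in range(len(x))) for c in range(d)])
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-d vectors for 3 tokens
_, bidir = self_attention(tokens)
_, causal = self_attention(tokens, causal=True)
print(bidir[0])   # the first token attends to all three positions
print(causal[0])  # the first token can only attend to itself: [1.0, 0.0, 0.0]
```

In the bidirectional case the first token's output already mixes in information from the tokens after it, which is exactly what a left-to-right model cannot do.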

```python
import torch
from transformers import BertTokenizer, BertModel

# Load the pre-trained BERT model and its matching tokenizer
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
model = BertModel.from_pretrained('bert-base-uncased')

# Tokenize the input text; [CLS] and [SEP] are added automatically
inputs = tokenizer("This is an example sentence",
                   truncation=True,
                   max_length=512,
                   return_tensors='pt')

# Get contextualized embeddings (no gradients are needed for inference)
with torch.no_grad():
    output = model(**inputs)

# One 768-dimensional vector per token: shape (batch, seq_len, 768)
embeddings = output.last_hidden_state
print(embeddings.shape)
```
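BERT returns one vector per token, so tasks that need a single sentence vector must pool them. Mean pooling over the real (non-padding) tokens is one common choice; taking the `[CLS]` vector is another. The sketch below uses plain lists with toy numbers standing in for `output.last_hidden_state[0].tolist()` and `inputs['attention_mask'][0].tolist()`; `mean_pool` is a hypothetical helper.

```python
def mean_pool(token_embeddings: list[list[float]], attention_mask: list[int]) -> list[float]:
    """Average the embeddings of real tokens, skipping padding positions (mask == 0)."""
    dim = len(token_embeddings[0])
    kept = [vec for vec, m in zip(token_embeddings, attention_mask) if m == 1]
    if not kept:
        raise ValueError("attention_mask contains no real tokens")
    return [sum(vec[c] for vec in kept) / len(kept) for c in range(dim)]


# Toy stand-ins for one sequence: 4 positions, 3-dim vectors, last position is padding.
embeddings = [[1.0, 2.0, 3.0], [3.0, 2.0, 1.0], [2.0, 2.0, 2.0], [9.0, 9.0, 9.0]]
mask = [1, 1, 1, 0]
print(mean_pool(embeddings, mask))  # [2.0, 2.0, 2.0]
```

Respecting the attention mask matters when sentences of different lengths are batched together: without it, padding vectors would distort the average.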

BERT in Action: Applications and Use Cases

BERT's versatility and accuracy have led to its widespread adoption in various NLP tasks. Some notable applications include:

Sentiment Analysis

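BERT-based models achieved state-of-the-art results on sentiment benchmarks such as SST-2 (part of the GLUE suite) at the time of BERT's release, outperforming earlier approaches. Fine-tuning adds a small classification layer on top of the representation of the special [CLS] token and trains the whole network on a labeled dataset, so that it learns to recognize sentiment patterns and classify text as positive, negative, or neutral.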

```python
import torch
from transformers import BertForSequenceClassification, BertTokenizer

# Load the pre-trained BERT encoder with a 3-way classification head on top.
# Note: the classification head is newly (randomly) initialized, so its
# predictions are meaningless until the model is fine-tuned on labeled data.
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
model = BertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=3)

# Tokenize the input text
inputs = tokenizer("I love this product!",
                   truncation=True,
                   max_length=512,
                   return_tensors='pt')

# Get the classification logits, shape (batch, num_labels)
with torch.no_grad():
    output = model(**inputs)

# Index of the highest-scoring class
predicted_class = output.logits.argmax(dim=-1).item()
```
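The classifier outputs raw scores (logits), one per class, which still have to be turned into probabilities and a label name. The sketch below assumes a hypothetical label order (`LABELS`); in practice the mapping is whatever was used when the model was fine-tuned. The toy logits stand in for `output.logits[0].tolist()`.

```python
import math

# Hypothetical label order; the real mapping comes from the fine-tuning setup.
LABELS = ["negative", "neutral", "positive"]


def softmax(logits: list[float]) -> list[float]:
    """Convert raw logits into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits: list[float]) -> tuple[str, float]:
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]


# Toy logits standing in for output.logits[0].tolist() from a fine-tuned model.
label, confidence = predict_label([-1.2, 0.3, 2.5])
print(label, round(confidence, 3))
```

Subtracting the maximum logit before exponentiating does not change the result but avoids overflow for large scores.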

Question Answering

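BERT has also shown strong performance on extractive question answering, where the answer is a span of a given passage. The original BERT paper reported state-of-the-art results on SQuAD v1.1 and v2.0 at the time of release, and BERT-based readers have since been applied to other datasets such as TriviaQA. A fine-tuned model scores every token as a possible answer start and end, and the highest-scoring valid span is returned as the answer. Because BERT accepts at most 512 tokens, longer texts are typically split into overlapping windows that are processed separately.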
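In Hugging Face `transformers`, `BertForQuestionAnswering` returns `start_logits` and `end_logits` for every token. A simplified decoding step is sketched below: `best_span` is a hypothetical helper, the scores are toy values, and production code additionally maps tokens back to character offsets and, for SQuAD v2.0, handles "no answer" predictions.

```python
def best_span(start_logits: list[float], end_logits: list[float],
              context_range: tuple[int, int], max_answer_len: int = 30) -> tuple[int, int]:
    """Return (start, end) maximizing start_logits[start] + end_logits[end].

    Only spans inside the passage tokens (first <= start <= end <= last)
    and at most max_answer_len tokens long are considered.
    """
    first, last = context_range
    best_score, best = float("-inf"), (first, first)
    for s in range(first, last + 1):
        for e in range(s, min(s + max_answer_len, last + 1)):
            score = start_logits[s] + end_logits[e]
            if score > best_score:
                best_score, best = score, (s, e)
    return best


tokens = ["[CLS]", "where", "is", "it", "?", "[SEP]", "it", "is", "in", "paris", ".", "[SEP]"]
# Toy scores standing in for a fine-tuned model's start_logits / end_logits.
start_logits = [0.5, 0.1, 0.0, 0.2, 0.0, 0.0, 0.3, 0.1, 0.4, 3.0, 0.0, 0.0]
end_logits = [0.5, 0.0, 0.1, 0.0, 0.0, 0.0, 0.1, 0.2, 0.3, 2.8, 0.6, 0.0]
start, end = best_span(start_logits, end_logits, context_range=(6, 10))
answer = " ".join(tokens[start:end + 1])
print(answer)  # paris
```

Restricting the search to passage tokens keeps the model from "answering" with part of the question or a special token, and the length cap keeps the quadratic search cheap.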

Language Translation

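BERT's language representations have also found a place in machine translation, though indirectly. BERT is an encoder-only model, so it cannot generate translations on its own. Its pre-trained weights can, however, be used to initialize the encoder (and, with added cross-attention layers, the decoder) of a sequence-to-sequence model, which is then fine-tuned on parallel corpora to produce translations. Multilingual BERT (mBERT), pre-trained on Wikipedia text in 104 languages, provides shared representations that are useful for such cross-lingual setups.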

Conclusion

BERT has had a profound impact on NLP, offering a powerful tool for understanding and processing human language. Its applications range from sentiment analysis and question answering to serving as a building block in translation systems. Successors such as RoBERTa, ALBERT, and DistilBERT have already refined its training recipe and efficiency, and as researchers continue to push these models further, we can expect even more innovative applications across AI.

Unlocking the Potential of BERT: What's Next?

With BERT, we're only scratching the surface of what's possible in AI. As the NLP community continues to explore and refine this technology, we can expect further breakthroughs, from stronger translation systems to more accurate chatbots. Stay tuned for the next wave of BERT-powered innovations that will shape the future of AI.