
Navigating the Ethics of AI: A Deep Dive into AI TRiSM

Author: Adil ABBADI

Introduction

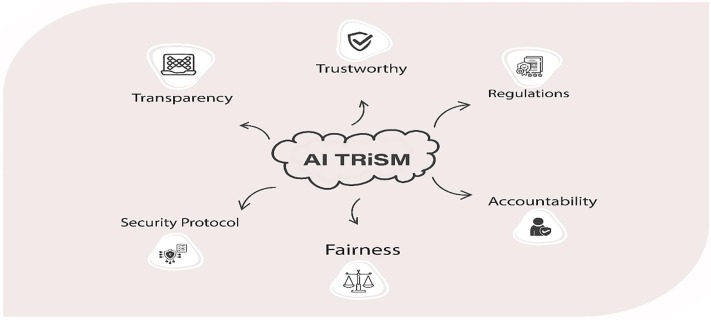

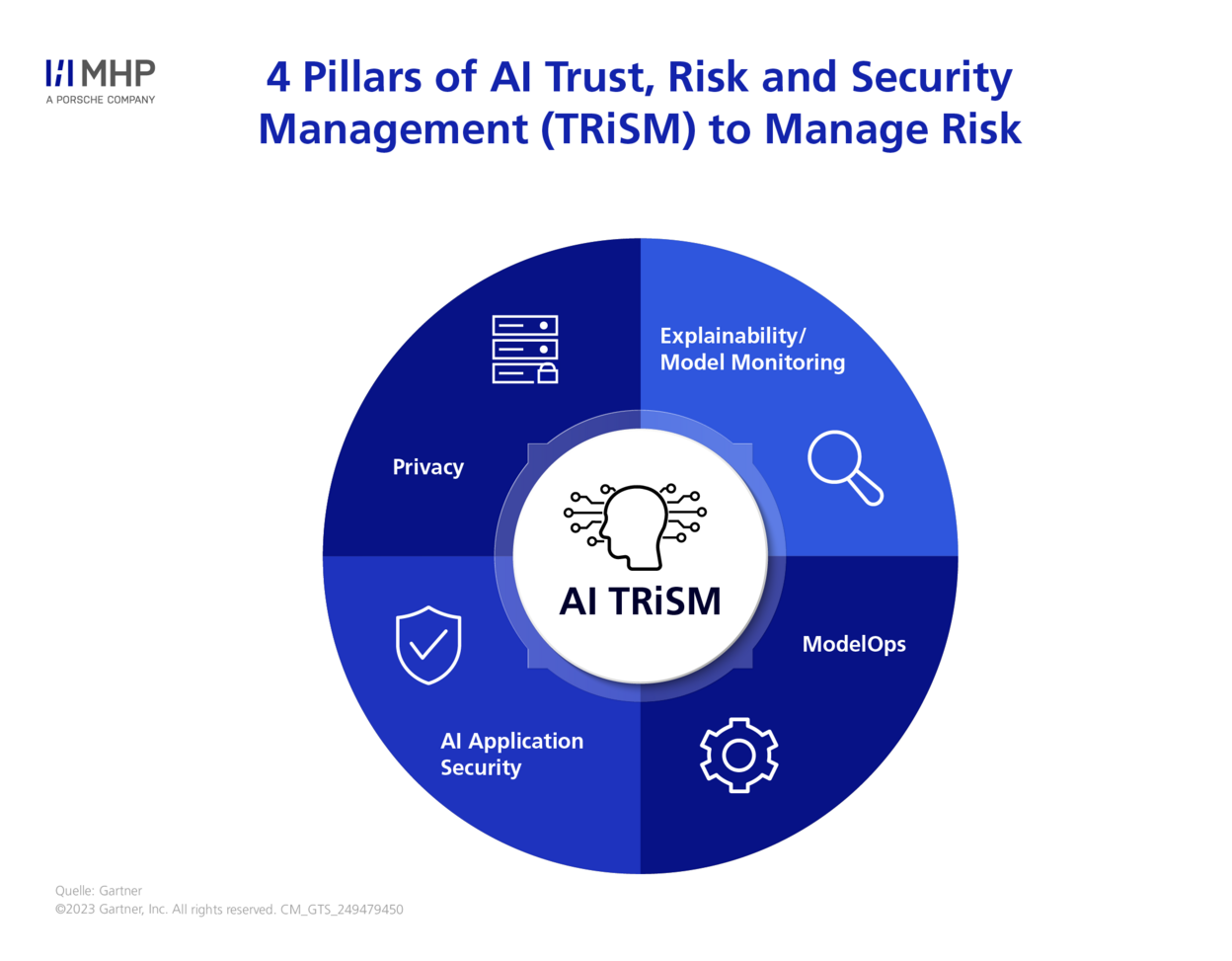

The rapid advancement of Artificial Intelligence (AI) has created unprecedented opportunities for innovation and growth. However, as AI systems become more pervasive, addressing the ethical implications of how they are developed and deployed has become increasingly pressing. One crucial aspect of responsible AI development is managing trust, risk, and security: the three pillars of AI TRiSM (AI Trust, Risk and Security Management), a framework popularized by Gartner.

- Trust in AI Systems

- Managing Risk in AI Systems

- Securing AI Systems

- Conclusion

- Further Exploration and Action

Trust in AI Systems

Trust is a multifaceted concept in the context of AI. It encompasses the reliability, transparency, and accountability of AI systems. To establish trust, AI developers should prioritize the following aspects:

- Transparency: AI systems should be designed to provide clear explanations for their decision-making processes and outcomes.

- Accountability: Developers and users must be held accountable for the actions and consequences of AI systems.

- Reliability: AI systems should be designed to perform consistently and accurately, even in unexpected scenarios.
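The accountability point becomes concrete once every decision leaves an audit trail. The sketch below is a minimal, hypothetical example (the `DecisionLog` class, actor names, and fields are illustrative, not part of any TRiSM standard): each logged decision stores the SHA-256 hash of the previous entry, so quietly editing a past record breaks the chain and `verify()` detects it.

```python
import hashlib
import json


class DecisionLog:
    """Hypothetical append-only decision log; each entry stores the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def append(self, actor, decision, inputs):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {"actor": actor, "decision": decision, "inputs": inputs, "prev_hash": prev_hash}
        # Hash a canonical JSON encoding (sorted keys) of the record's contents
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        record["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(record)
        return record["hash"]

    def verify(self):
        """Recompute every hash and check the links; False means history was altered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = DecisionLog()
log.append("credit-model-v2", "approve", {"income": 52000})
log.append("analyst:jdoe", "override:deny", {"reason": "manual review"})
print(log.verify())  # True

log.entries[0]["decision"] = "deny"  # tamper with a past decision
print(log.verify())  # False
```

A hash chain makes tampering evident but does not prevent it, so in practice such a log would also be written to storage that the people operating the model cannot modify.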

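```python
# Example code snippet demonstrating transparency in AI decision-making.
# Treat `interpretable_ai` and `TransparencyModel` as placeholders for
# whichever explainability library you use; they are illustrative names.
import interpretable_ai as ia

model = ia.TransparencyModel()

# `input_data` is the sample whose prediction should be explained
explanation = model.explain_decision(input_data)
print(explanation)
```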
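For a version that runs as-is with only the standard library, the sketch below swaps in an inherently interpretable linear scoring model. `LinearScorer`, its feature names, and its weights are hypothetical. The idea is that for a linear model each feature's contribution is exactly its term in the weighted sum, so the explanation is faithful by construction; complex models need post-hoc attribution methods (for example SHAP or LIME), which estimate contributions rather than read them off directly.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Hypothetical interpretable model: score = bias + sum(weight_i * feature_i)."""
    weights: dict
    bias: float = 0.0

    def predict(self, features):
        return self.bias + sum(self.weights[name] * value for name, value in features.items())

    def explain_decision(self, features):
        # Each feature's contribution is its term in the weighted sum,
        # so bias + contributions add up exactly to the score.
        contributions = {name: self.weights[name] * value for name, value in features.items()}
        # Rank features by the magnitude of their influence on this decision
        ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
        return {"score": self.predict(features), "bias": self.bias, "contributions": ranked}


model = LinearScorer(weights={"income": 0.5, "debt": -1.25, "years_employed": 0.25}, bias=1.0)
input_data = {"income": 4.0, "debt": 2.0, "years_employed": 6.0}
explanation = model.explain_decision(input_data)
print(explanation)
# {'score': 2.0, 'bias': 1.0, 'contributions': [('debt', -2.5), ('income', 2.0), ('years_employed', 1.5)]}
```

Here the explanation tells a reviewer that debt pulled the score down more than income pushed it up, which is the kind of decision-level transparency the bullet list above asks for.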

Managing Risk in AI Systems

Risk management is a critical component of AI TRiSM. AI systems can pose risks to individuals, organizations, and society as a whole. To mitigate these risks, developers should:

- Identify potential risks: Conduct thorough risk assessments to identify potential vulnerabilities and weaknesses in AI systems.

- Implement risk mitigation strategies: Develop and implement strategies to mitigate identified risks, such as robust testing and validation protocols.

- Continuously monitor and evaluate: Regularly monitor and evaluate AI systems to ensure they stay within predefined risk thresholds, such as a minimum accuracy or a maximum performance gap between user groups.
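The first and third steps can be sketched as a small risk register plus a monitoring check. This is an illustrative assumption rather than a prescribed TRiSM method: risks are scored as likelihood x impact on 1 to 5 scales (a common risk-matrix convention), and the risk boundaries are modeled as hypothetical (min, max) limits on live metrics such as accuracy. All risk names, thresholds, and metric names below are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) .. 5 (almost certain)
    impact: int      # 1 (negligible) .. 5 (severe)
    mitigation: str = ""

    @property
    def score(self):
        return self.likelihood * self.impact


def prioritize(risks, threshold=12):
    """Return risks whose likelihood x impact score meets the threshold, highest first."""
    return sorted((r for r in risks if r.score >= threshold), key=lambda r: r.score, reverse=True)


def within_boundaries(metrics, boundaries):
    """Compare live metrics with (min, max) limits; return names of breached or missing metrics."""
    breaches = []
    for name, (low, high) in boundaries.items():
        value = metrics.get(name)
        if value is None or not (low <= value <= high):
            breaches.append(name)
    return breaches


register = [
    Risk("biased outcomes for a subgroup", likelihood=3, impact=5, mitigation="fairness testing"),
    Risk("performance drop on new data", likelihood=4, impact=4, mitigation="drift monitoring"),
    Risk("training data leak", likelihood=2, impact=5, mitigation="access controls"),
]
for risk in prioritize(register):
    print(risk.score, risk.name, "->", risk.mitigation)

boundaries = {"accuracy": (0.90, 1.0), "positive_rate_gap": (0.0, 0.05)}
print(within_boundaries({"accuracy": 0.87, "positive_rate_gap": 0.03}, boundaries))  # ['accuracy']
```

Treating a missing metric as a breach is a deliberate choice: a monitoring gap is itself a risk and should raise an alert rather than pass silently.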

Securing AI Systems

Security is a paramount concern in AI development, as AI systems can be vulnerable to attacks such as adversarial inputs, data poisoning, and model theft, as well as to ordinary data breaches. To ensure the security of AI systems, developers should:

- Implement robust security protocols: Protect AI systems and their data with measures such as encryption and access-controlled, secure data storage.

- Conduct regular security audits: Schedule security audits and penetration tests to identify and address vulnerabilities.

- Ensure secure data handling: Handle and process sensitive data in line with applicable data protection regulations and standards, such as the GDPR.

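```python
# Example code snippet demonstrating secure data encryption
# (uses the third-party `cryptography` package)
from cryptography.fernet import Fernet

# Generate a symmetric key; in production, load it from a secrets manager instead
encryption_key = Fernet.generate_key()
cipher = Fernet(encryption_key)

sensitive_data = b"example sensitive record"
# Fernet provides authenticated encryption (AES-128-CBC plus HMAC-SHA256)
encrypted_data = cipher.encrypt(sensitive_data)
print(encrypted_data)
```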
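Encryption protects stored and transmitted data, but training pipelines often do not need raw identifiers at all. A minimal sketch of secure data handling, assuming hypothetical `email` and `national_id` fields, replaces direct identifiers with keyed HMAC-SHA256 pseudonyms before records reach the model. It uses only the Python standard library; the key handling shown is simplified for illustration.

```python
import hashlib
import hmac
import secrets

# Hypothetical pseudonymization key; in practice load it from a secrets manager,
# stored separately from the data it protects.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map an identifier to a keyed SHA-256 HMAC (64 hex chars); same input + key -> same token."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_training_record(record: dict, sensitive_fields=("email", "national_id")) -> dict:
    """Return a copy of the record with the listed sensitive fields pseudonymized."""
    cleaned = dict(record)  # shallow copy so the original record is left untouched
    for field in sensitive_fields:
        if field in cleaned:
            cleaned[field] = pseudonymize(str(cleaned[field]))
    return cleaned


row = {"email": "alice@example.com", "national_id": "A1234567", "age": 34}
safe_row = prepare_training_record(row)
print(safe_row["age"], safe_row["email"][:16], "...")
```

Because the pseudonym is deterministic for a given key, records about the same person can still be joined; without the key, an attacker cannot recompute pseudonyms to test guessed identifiers. Pseudonymized data can still count as personal data under regulations such as the GDPR, so this reduces rather than removes the handling obligations.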

Conclusion

The AI TRiSM framework offers a structured approach to managing trust, risk, and security in AI systems. By prioritizing these aspects, developers can support the responsible development and deployment of AI systems that benefit society as a whole.

Further Exploration and Action

As we continue to navigate the complexities of AI development, it is essential to stay informed about the latest advancements and best practices in AI TRiSM. We encourage developers, researchers, and organizations to explore the AI TRiSM framework further and adopt its principles to ensure the responsible development of AI systems that benefit humanity.

Remember, responsible AI development is a collective effort. Let's work together to create a future where AI is developed and deployed with trust, risk, and security at its core.